Real estate data normalization is critical for transforming messy, inconsistent property data into a standard format. Without it, professionals face issues like inaccurate valuations, missed opportunities, and compliance risks. Here’s a quick breakdown of the biggest challenges:

- Fragmented Sources: Data comes from MLS, public records, private datasets, etc., with varying formats and schemas.

- Inconsistent Quality: Typos, missing fields, duplicates, and outdated records skew analysis.

- Timing Issues: Data updates at different intervals, leading to gaps in accuracy.

- Complex Transformation: Manual processes dominate, making standardization slow and error-prone.

Solutions include using industry standards, automation, and AI tools to streamline the process. For example, platforms like BatchData offer APIs and enrichment services to clean and unify data efficiently. Companies that address these issues can make faster decisions and improve data reliability. Ignoring them leads to inefficiency, higher costs, and reduced confidence in analytics.

Common Real Estate Data Normalization Challenges

To understand why achieving consistent and reliable data integration in real estate can be so challenging, let’s dive into the specific hurdles professionals face when normalizing data. These obstacles highlight the need for tailored solutions, which we’ll explore in later sections.

Fragmented Data Sources and Schema Differences

One of the biggest challenges in real estate data normalization stems from juggling multiple data sources with incompatible formats. The same attribute might be labeled differently across systems, forcing manual intervention to make sense of it all.

Take the typical real estate data landscape: Multiple listing service (MLS) systems often rely on proprietary formats, public records follow county-specific government standards, and private databases define fields in their own way. This patchwork of formats creates a tangled web of data that’s hard to integrate.

Even something as seemingly straightforward as address formatting can become a headache. For instance, public records might list a property as "123 Main Street, Unit 4A", while the MLS records it as "123 Main St #4A", and internal systems note it as "123 Main St., Apt 4A." Without normalization, a system may treat these as three different properties, inflating property counts and skewing analyses.
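One way to collapse these variants is to reduce every address to a canonical matching key before comparing records. The Python sketch below is a minimal illustration, not a production address parser. The small `STREET_SUFFIXES` and `UNIT_DESIGNATORS` tables and the `normalize_address` function are hypothetical. A real pipeline would rely on complete USPS abbreviation tables or a dedicated address-verification service.

```python
import re

# Hypothetical abbreviation tables; real pipelines would use the full USPS lists.
STREET_SUFFIXES = {"street": "ST", "st": "ST", "avenue": "AVE", "ave": "AVE", "road": "RD", "rd": "RD"}
UNIT_DESIGNATORS = {"unit", "apt", "apartment", "suite", "ste", "#"}


def normalize_address(raw: str) -> str:
    """Reduce an address string to an uppercase matching key with standard abbreviations."""
    # Lowercase and turn punctuation into spaces so "St." and "St" look the same.
    text = raw.lower().replace(",", " ").replace(".", " ")
    # Split a "#" from the number it precedes: "#4a" -> "# 4a".
    text = re.sub(r"#\s*", "# ", text)
    tokens = text.split()
    out = []
    i = 0
    while i < len(tokens):
        token = tokens[i]
        if token in UNIT_DESIGNATORS and i + 1 < len(tokens):
            # Every unit designator (Unit, Apt, #, ...) becomes "UNIT <number>".
            out.append("UNIT " + tokens[i + 1].upper())
            i += 2
        else:
            out.append(STREET_SUFFIXES.get(token, token.upper()))
            i += 1
    return " ".join(out)


for raw in ("123 Main Street, Unit 4A", "123 Main St #4A", "123 Main St., Apt 4A"):
    print(normalize_address(raw))  # each prints: 123 MAIN ST UNIT 4A
```

The output is a key for matching records, not a mailing address. Mapping "Apt", "Suite", and "#" to the same `UNIT` token is a deliberate simplification for comparison purposes.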

On top of that, measurement units, date formats, and classification systems often differ between sources. For example, square footage might include basements in one database but exclude them in another. These inconsistencies make it tough to compare properties or analyze portfolios accurately.
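Rules like these are easiest to enforce when each source's conventions are written down explicitly. Purely for illustration, the sketch below assumes that a hypothetical `county` feed folds basement area into square footage and a hypothetical `mls` feed does not. It normalizes everything to above-grade area. The `DATE_FORMATS` list is likewise a hypothetical set of formats seen across feeds.

```python
from datetime import date, datetime
from typing import Optional

# Hypothetical per-source convention: does reported square footage include the basement?
SOURCE_INCLUDES_BASEMENT = {"county": True, "mls": False}

# Hypothetical date formats observed across feeds, tried in order.
DATE_FORMATS = ("%m/%d/%Y", "%Y-%m-%d", "%Y%m%d")


def normalize_sqft(source: str, reported: float, basement_sqft: Optional[float]) -> Optional[float]:
    """Return above-grade square footage, or None when it cannot be derived."""
    if not SOURCE_INCLUDES_BASEMENT[source]:  # an unknown source raises KeyError on purpose
        return reported
    if basement_sqft is None:
        return None  # basement share unknown: flag for review rather than guess
    return reported - basement_sqft


def parse_date(raw: str) -> date:
    """Parse a date string using the first matching known format."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date format: {raw!r}")
```

When the basement share is unknown, `normalize_sqft` returns `None` instead of the raw figure. This keeps a basement-inclusive number from silently mixing with above-grade numbers. Parsing alone cannot resolve an ambiguous value such as `03/05/2024`, which could mean March 5 or May 3. In practice, the expected format should be configured per source rather than inferred.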

Next, let’s look at how data quality and timing issues further complicate normalization efforts.

Data Quality, Completeness, and Timing Issues

Poor data quality is another persistent obstacle in real estate normalization. Missing owner names, incorrect property values, or duplicate listings can all throw off decision-making. Incomplete or outdated records, in particular, can lead to misvaluations and flawed market insights.

Timing discrepancies also create problems. Data sources update on different schedules – some in real-time, others weekly or monthly, and government records often lag by months. This lack of synchronization means recent transactions might appear in one system but not in another, making it difficult to maintain an accurate view of the market.
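A common mitigation is to store each source's refresh date alongside its data and reconcile on that date. The sketch below shows one simple policy, assuming a hypothetical `Record` type keyed by a normalized address or parcel ID. It keeps the most recently refreshed record per property. It also flags properties whose newest record is older than a chosen threshold (90 days here, an arbitrary example).

```python
from dataclasses import dataclass
from datetime import date
from typing import Dict, List


@dataclass
class Record:
    property_key: str  # normalized address or parcel ID
    source: str        # e.g. "county" or "mls"
    sale_price: int
    as_of: date        # when the source last refreshed this record


def latest_by_property(records: List[Record]) -> Dict[str, Record]:
    """Keep the most recently refreshed record for each property."""
    latest: Dict[str, Record] = {}
    for rec in records:
        current = latest.get(rec.property_key)
        if current is None or rec.as_of > current.as_of:
            latest[rec.property_key] = rec
    return latest


def stale_keys(latest: Dict[str, Record], today: date, max_age_days: int = 90) -> List[str]:
    """List properties whose newest available record is older than max_age_days."""
    return sorted(
        key for key, rec in latest.items() if (today - rec.as_of).days > max_age_days
    )
```

The newest record is not always the most authoritative. A recorded deed may deserve priority over a fresher but unverified listing, so a real merge policy usually ranks sources as well as dates.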

These timing and quality issues also affect predictive analytics. Machine learning models trained on incomplete or inconsistent data may produce unreliable forecasts, undermining their value in decision-making.

Complex Data Transformation and Handling Unclear Records

The technical side of normalizing real estate data brings its own set of challenges. About 55% of real estate professionals still rely on manual data manipulation for reporting, highlighting the difficulty of automating these processes. Transforming data into a unified structure requires complex logic and meticulous validation, especially given the fragmented nature of the data.

Rent data offers a good example of this complexity. One lease might list monthly rent, another annual rent, and a third weekly rent. Some leases factor in utilities and fees, while others don’t. Accurate comparisons therefore require precise rules, such as converting every figure to the same period and separating base rent from bundled charges.
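Here is a minimal sketch of such rules, assuming a hypothetical `Lease` record that states the rent period and whether utilities are bundled in. It converts every lease to base annual rent. The 52-weeks-per-year factor is a simplification, since a year is slightly longer than 52 weeks. The bundled-utilities estimate would have to come from the lease itself or a separate assumption.

```python
from dataclasses import dataclass

# Approximate conversion factors to a yearly figure (52 weeks is a simplification).
PERIODS_PER_YEAR = {"weekly": 52, "monthly": 12, "annual": 1}


@dataclass
class Lease:
    amount: float                   # rent exactly as stated in the lease
    period: str                     # "weekly", "monthly", or "annual"
    includes_utilities: bool        # are utilities/fees bundled into the amount?
    monthly_utilities: float = 0.0  # estimated bundled charges per month


def base_annual_rent(lease: Lease) -> float:
    """Annualize rent and remove bundled charges so leases compare like-for-like."""
    if lease.period not in PERIODS_PER_YEAR:
        raise ValueError(f"unknown rent period: {lease.period!r}")
    annual = lease.amount * PERIODS_PER_YEAR[lease.period]
    if lease.includes_utilities:
        annual -= lease.monthly_utilities * 12
    return annual


leases = [
    Lease(2000, "monthly", False),
    Lease(24000, "annual", False),
    Lease(2150, "monthly", True, monthly_utilities=150),
]
print([base_annual_rent(lease) for lease in leases])  # [24000, 24000, 24000]
```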

Duplicate detection is another tough area. Properties often appear multiple times across platforms, with slight differences in addresses, descriptions, or identifiers. A strong deduplication process must account for abbreviations, misspellings, and formatting variations – while avoiding false matches that could merge distinct properties by mistake.
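The sketch below shows one common pattern that uses only Python's standard library. It compares street strings with `difflib.SequenceMatcher`, auto-merges only very close pairs, and sends borderline pairs to a manual review queue. The record fields, the `MATCH_AT` and `REVIEW_AT` thresholds, and the rule that different unit numbers never match are illustrative assumptions. The sketch also compares only records that share a ZIP code. This technique, known as blocking, keeps the number of comparisons manageable.

```python
from difflib import SequenceMatcher
from itertools import combinations
from typing import Dict, List, Tuple

# Hypothetical thresholds; tune against a hand-labeled sample of pairs.
MATCH_AT = 0.92   # at or above: treat as the same property
REVIEW_AT = 0.80  # between REVIEW_AT and MATCH_AT: route to manual review


def similarity(a: str, b: str) -> float:
    """Character-level similarity in [0, 1] between two normalized street strings."""
    return SequenceMatcher(None, a, b).ratio()


def classify_pairs(records: List[Dict[str, str]]) -> List[Tuple[str, str, str]]:
    """Label candidate pairs as 'duplicate' or 'review'; clearly distinct pairs are omitted."""
    # Blocking: group by ZIP so only records in the same ZIP are compared.
    blocks: Dict[str, List[Dict[str, str]]] = {}
    for rec in records:
        blocks.setdefault(rec["zip"], []).append(rec)

    results: List[Tuple[str, str, str]] = []
    for block in blocks.values():
        for a, b in combinations(block, 2):
            # Different units in one building are different properties, however similar the street.
            if a.get("unit", "") != b.get("unit", ""):
                continue
            score = similarity(a["street"], b["street"])
            if score >= MATCH_AT:
                results.append((a["id"], b["id"], "duplicate"))
            elif score >= REVIEW_AT:
                results.append((a["id"], b["id"], "review"))
    return results
```

With these settings, `123 MAIN ST` and `123 MAIN STR` score about 0.96 and are labeled duplicates. By contrast, `123 MAIN ST` and `132 MAIN ST` score about 0.91 and go to review, because a transposed digit may be a typo or a different house. The sketch assumes its inputs are already normalized addresses.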

Missing identifiers or conflicting data from different sources frequently require manual review, slowing down the normalization process and increasing the risk of errors.

"BatchData pulls from verified sources and uses advanced validation to ensure data accuracy. With frequent updates and real-time delivery on select datasets, you’re always working with trusted information."

– BatchData

While thorough normalization can improve data quality, it can also slow down system performance. Striking the right balance between accuracy and efficiency often requires custom solutions tailored to an organization’s specific needs.

These challenges – fragmented sources, poor data quality, timing issues, and technical hurdles – can lead to wasted time, higher costs, and reduced confidence in data-driven decisions. Tackling them systematically is essential for unlocking the full potential of real estate data.

Solutions to Real Estate Data Normalization Challenges

Tackling fragmented data and schema inconsistencies requires a structured approach. By adopting specific strategies, you can overcome these hurdles and streamline your data normalization process. Below, we’ll dive into practical solutions that address issues like fragmented sources, inconsistent quality, and technical barriers.

Using Industry Data Standards and Governance

One effective way to address data inconsistencies is by leveraging frameworks like the OSCRE Industry Data Model™. This model establishes a shared language and structure for real estate data, making it easier for different platforms to exchange and integrate property information seamlessly.

A strong governance framework is equally important. This involves setting clear rules for data ownership, validation processes, and audit trails, both to maintain data accuracy and to comply with U.S. regulations. In practice, governance protocols should spell out who owns each dataset, which formats are required, and how updates are reviewed and applied.

Another key step is creating unified data templates. Instead of juggling multiple formats, a master template can map various schemas into a standardized structure. For example, such a template could standardize how square footage is calculated – ensuring consistency in whether basement space is included or excluded, which helps eliminate valuation discrepancies across markets.
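A master template can be as simple as a per-source field map. In the sketch below, the source names, field names, and canonical fields are hypothetical placeholders, not fields from any particular MLS or county schema. Every source lands in the same structure, and the sketch reports fields the template does not cover instead of silently dropping them. Definitional rules, such as whether `sqft` includes basements, would be documented alongside the map.

```python
from typing import Any, Dict, List

# Hypothetical source field names mapped onto one canonical template.
FIELD_MAP: Dict[str, Dict[str, str]] = {
    "mls": {"ListPrice": "list_price", "LivingArea": "sqft", "YearBuilt": "year_built"},
    "county": {"ASSESSED_VAL": "assessed_value", "BLDG_SQFT": "sqft", "YR_BLT": "year_built"},
}
CANONICAL_FIELDS = ("list_price", "assessed_value", "sqft", "year_built")


def to_canonical(source: str, row: Dict[str, Any]) -> Dict[str, Any]:
    """Rename a source row's fields to the template; missing fields become None."""
    mapping = FIELD_MAP[source]  # an unknown source raises KeyError instead of passing through
    out: Dict[str, Any] = {field: None for field in CANONICAL_FIELDS}
    unmapped: List[str] = []
    for key, value in row.items():
        if key in mapping:
            out[mapping[key]] = value
        else:
            unmapped.append(key)  # surfaced so the template can be extended deliberately
    out["unmapped_fields"] = sorted(unmapped)
    return out
```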

Using Automation and AI for Data Enrichment

Once standardized frameworks are in place, automation and AI can take the normalization process to the next level. These tools simplify tasks like data ingestion, validation, and transformation, reducing manual effort and improving speed.

AI-powered tools are particularly useful for extracting structured data from unstructured sources. Take lease documents, for instance: AI can digitize handwritten notes and documents in varied formats, flag discrepancies such as conflicting square footage or lease dates, and standardize the information. This matters because 55% of real estate companies still rely on manual methods for data reporting.

Automation can also validate data by flagging errors, verifying addresses, and cross-referencing information. Centralized platforms equipped with automation capabilities can aggregate data from multiple sources in real time, ensuring consistent formatting and immediate access to normalized datasets for reporting and analysis.
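Simple rule-based checks cover much of this ground before any AI is involved. The sketch below flags missing required fields, malformed ZIP codes, and implausible values. The required-field list and the numeric bounds are assumptions chosen for illustration. Verifying addresses against an authoritative source would be a separate step.

```python
import re
from datetime import date
from typing import Any, Dict, List, Optional

ZIP_PATTERN = re.compile(r"\d{5}(-\d{4})?")         # US ZIP or ZIP+4
REQUIRED_FIELDS = ("address", "zip", "owner_name")  # hypothetical required set


def validate(record: Dict[str, Any], this_year: Optional[int] = None) -> List[str]:
    """Return human-readable problems; an empty list means the record passed."""
    year_now = this_year if this_year is not None else date.today().year
    errors: List[str] = []
    for field in REQUIRED_FIELDS:
        if not record.get(field):
            errors.append(f"missing {field}")
    zip_code = record.get("zip")
    if zip_code and not ZIP_PATTERN.fullmatch(str(zip_code)):
        errors.append(f"bad zip: {zip_code}")
    sqft = record.get("sqft")
    if sqft is not None and sqft <= 0:
        errors.append(f"non-positive sqft: {sqft}")
    year = record.get("year_built")
    # Plausibility bounds are illustrative; allow next year for new construction.
    if year is not None and not 1700 <= year <= year_now + 1:
        errors.append(f"implausible year_built: {year}")
    return errors
```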

BatchData’s Role in Supporting Normalization

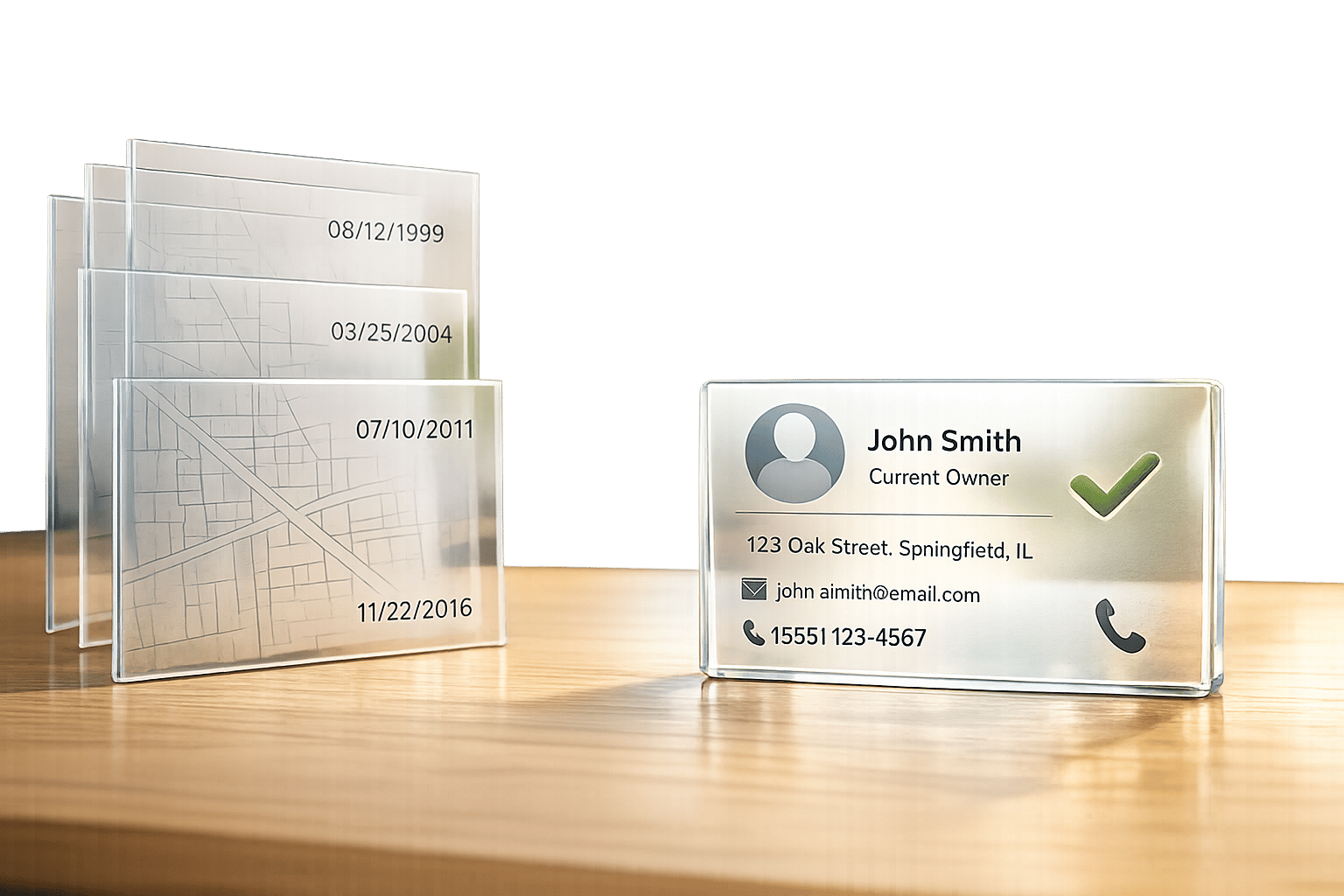

BatchData offers tools that complement standardized frameworks and AI technologies, addressing many of the challenges tied to normalization. With a database of over 155 million properties and 800+ attributes, BatchData provides a strong foundation for creating consistent, normalized data.

The Property Search API simplifies the process by combining property details, ownership information, market data, and analytics into a single request. This reduces the need to manage fragmented sources and reconcile their schemas yourself.

For enhancing data quality, BatchData’s contact enrichment and skip tracing services fill in incomplete records by adding verified contact information. With a 76% accuracy rate for reaching property owners – three times higher than industry averages – these tools ensure your database is actionable and reliable.

BatchData also offers APIs, bulk data options, and professional services to seamlessly integrate normalized data into your systems. This minimizes manual data transfers and errors. Plus, their pay-as-you-go pricing model allows you to scale your efforts without hefty upfront costs, making these tools accessible for businesses of all sizes.

Matching Challenges to Solutions

Tackling normalization challenges head-on requires aligning specific problems with practical solutions. The table below breaks down common hurdles, their corresponding fixes, and the resulting business benefits.

| Challenge | Solution | Business Impact |
|---|---|---|
| Fragmented data sources & schema differences | Industry data standards, unified templates, centralized platforms | Quicker integration, fewer manual errors, consistent reporting across properties |
| Data quality & completeness issues | Automated validation, data enrichment services, centralized platforms | More accurate financial analysis, smarter decision-making, reduced investment risks |
| Complex data transformation | AI-powered extraction tools, automated mapping, professional services | Lower manual workload, faster reporting, no formatting delays |
| Manual reporting & data silos | Automation tools, API integration, real-time data aggregation | Better operational efficiency, real-time insights, reduced time spent on data tasks |
| Unclear or duplicate records | Address normalization APIs, contact enrichment, skip tracing services | Fewer duplicates, more reliable analytics, improved contact accuracy |

These solutions illustrate how addressing normalization challenges transforms raw, unstructured data into actionable insights. Ignoring these issues leads to inefficiencies and risks. Poor data quality can result in costly mistakes, compliance problems, and missed opportunities, while slow reconciliation processes can delay crucial decisions. Furthermore, unreliable data undermines stakeholder confidence, compounding the financial impact over time.

BatchData offers tools specifically designed to tackle these challenges. For instance, their Property Search API consolidates fragmented property details, ownership information, market data, and analytics into a single, standardized request. This not only resolves fragmentation but also addresses data completeness. Similarly, their contact enrichment and skip tracing services turn unclear or incomplete records into reliable, actionable insights.

Timing is critical when adopting these solutions. Companies relying on manual processes risk falling behind as market conditions evolve. With 55% of real estate businesses still using manual data reporting methods, those who embrace automation gain a competitive edge by responding faster and making more accurate decisions.

The pay-as-you-go pricing model removes barriers to adoption, allowing companies to test solutions on specific challenges without committing to large-scale transformations upfront. This approach reduces risk and lets teams prove value to stakeholders before scaling their normalization efforts.

Tracking success metrics is essential to gauge the impact of these solutions. Metrics like shorter reporting cycles, reduced validation efforts, and improved financial reporting accuracy highlight the benefits of addressing normalization challenges systematically. By aligning challenges with targeted solutions, businesses can build a solid foundation for reliable, actionable real estate data.
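Operational metrics such as reporting-cycle time come from your own workflow. Data-level proxies, however, are simple to compute and compare before and after a change. The sketch below uses hypothetical field names and measures two such proxies. The first is per-field completeness. The second is the share of records whose normalized key repeats an earlier one.

```python
from typing import Any, Dict, List, Sequence


def completeness(records: List[Dict[str, Any]], fields: Sequence[str]) -> Dict[str, float]:
    """Share of records with a non-empty value, per field."""
    if not records:
        return {field: 0.0 for field in fields}
    return {
        field: sum(1 for rec in records if rec.get(field) not in (None, "")) / len(records)
        for field in fields
    }


def duplicate_rate(keys: List[str]) -> float:
    """Fraction of records whose normalized key repeats one seen earlier."""
    if not keys:
        return 0.0
    return 1 - len(set(keys)) / len(keys)
```

Recording both numbers for a baseline snapshot and for each later load makes improvements, or regressions, visible over time.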

Conclusion: The Path to Actionable Real Estate Data

Getting your data in order is the backbone of making confident, informed decisions in real estate. When data comes from too many fragmented sources or lacks quality, it can lead to missed opportunities, incorrect valuations, and higher risks – none of which inspire confidence among stakeholders.

The struggle is real. Many professionals still wrestle with consolidating data, and about 55% still rely on manual reporting. This creates a clear edge for those who adopt advanced tools to streamline their data processes. Companies sticking with outdated methods risk falling behind as the market evolves.

A great example of overcoming these hurdles is Singerman Real Estate. This Chicago-based investment firm tackled persistent data fragmentation by adopting a comprehensive normalization platform. The result? Better data quality, greater transparency, and smarter decision-making. Their success underscores the measurable advantages that automated data normalization can bring to the table.

Automation and AI-powered tools are game-changers for normalization challenges. They provide scalable systems that not only solve current issues but also lay the groundwork for future growth. With BatchData’s extensive property datasets, organizations can turn raw data into actionable insights. Tracking improvements like fewer duplicate records, quicker integrations, and sharper decision-making makes it easier to justify these investments and refine strategies over time.

The real estate industry is at a turning point. Companies that commit to robust data normalization today are setting themselves up for long-term success. On the flip side, those who wait risk falling into inefficiency and losing their competitive edge. BatchData’s comprehensive normalization solutions are here to help transform your data into a real strategic advantage.

FAQs

How can I address fragmented data sources when normalizing real estate data?

Fragmented data sources are a frequent hurdle in real estate data normalization, but there are effective ways to tackle this issue. Begin by bringing all your data into a central repository. This ensures every piece of information is stored in one place, making it more accessible and manageable. To simplify analysis, use standardized formats for key fields like property addresses, dates, and numerical values.

You can also use advanced tools or platforms to enhance and integrate your data. For example, BatchData provides services like property and contact data enrichment, skip tracing, and APIs for property searches. These tools can help fill in missing details and boost overall accuracy. By combining these approaches, you can transform fragmented data into a cohesive, dependable dataset tailored to your real estate needs.

How do automation and AI tools improve real estate data normalization?

Automation and AI tools simplify the process of normalizing real estate data by improving accuracy, maintaining consistency, and boosting efficiency. These tools can automatically detect and fix discrepancies in property records, enhance datasets with additional insights, and merge information from various sources effortlessly.

Key features like property search APIs, contact data enrichment, and bulk data processing make managing large datasets far less labor-intensive. With automation and AI, businesses can rely on well-organized, dependable data to make quicker, more informed decisions.

What are the risks of using manual processes for data normalization in the fast-paced real estate market?

In the fast-paced world of real estate, relying on manual data normalization comes with its share of challenges. It can lead to inconsistent outcomes, wasted time, and avoidable human errors. These problems can distort property information, making it tougher to spot trends or make well-informed decisions.

On top of that, as real estate data continues to expand, manual methods quickly become impractical. They can slow down essential updates and undermine the reliability of your data. Turning to automated solutions offers a way to simplify workflows and maintain accuracy, even as the data scales.