The U.S. real estate market is transforming through proptech – tools and platforms that streamline property data, valuations, underwriting, and analytics. Developers and product teams face mounting pressure to integrate data efficiently, ensure clean pipelines, and deliver features at unprecedented speeds. Here’s what you need to know:

- Proptech’s Core: It revolves around data – property attributes, ownership records, valuations, and financial indicators. Fast, reliable data integration is the backbone of successful proptech products.

- Developer Experience (DX): Superior DX is critical. Clear documentation, interactive API testing, and quick onboarding help developers move from concept to production in days, not weeks.

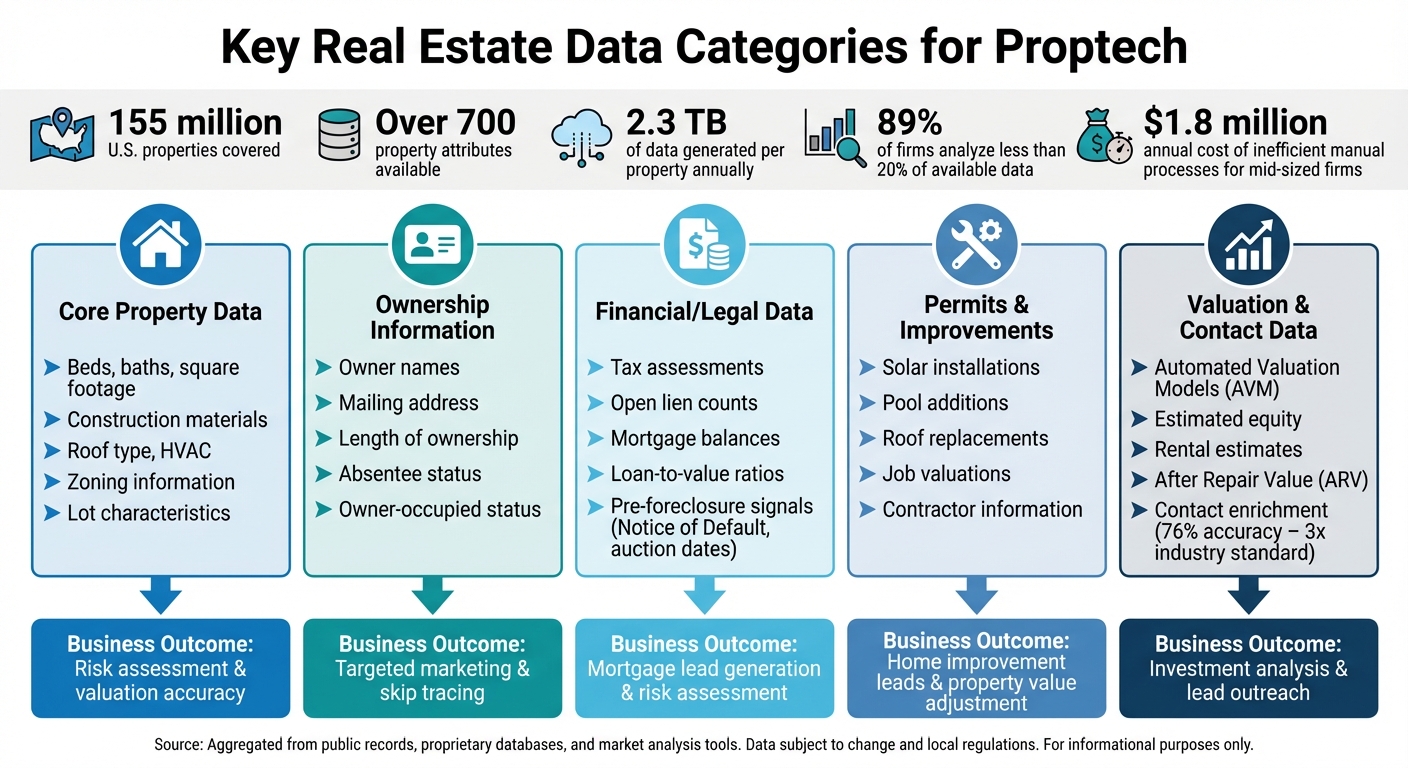

- Data Categories: Proptech relies on core property data, ownership info, financial details, permits, and valuations. These datasets fuel tools like AVMs, lead scoring, and risk assessments.

- Scalable Systems: Modern architectures use APIs for real-time data and cloud platforms like Snowflake for bulk delivery. Caching, asynchronous loading, and modular designs ensure performance and scalability.

- Emerging Trends: AI agents, digital twins, and predictive analytics are shaping the future. Flexible systems and multi-source resilience will keep platforms competitive.

To stay ahead, focus on clean data, efficient developer tools, and scalable infrastructure. This approach allows faster feature releases, better user experiences, and the ability to adapt to proptech’s evolving demands.

Understanding Proptech Data Intelligence

Key Real Estate Data Categories and Their Business Applications in Proptech

Creating effective proptech products begins with a deep understanding of the data domains that shape U.S. real estate decisions. With the U.S. proptech market projected to hit $25 billion in 2024, the industry is poised for rapid growth. This momentum is driven by developers who know how to harness detailed property data to build tools that enable quicker valuations, smarter investments, and more precise lead generation.

Each property generates a staggering 2.3 TB of data annually, yet 89% of firms analyze less than 20% of this data. For developers, this presents a huge opportunity to design solutions that can drive meaningful business results. Inefficient manual processes cost mid-sized firms around $1.8 million every year, an issue that well-constructed data systems can address.

Key Real Estate Data Categories

U.S. property data can be grouped into several essential categories, each serving specific purposes in proptech applications.

Core property data includes details like the number of bedrooms and bathrooms, square footage, lot characteristics, zoning information, and construction specifics such as roof type and HVAC systems. This data is crucial for accurate valuations and risk assessments.

Ownership information focuses on owner names, mailing addresses, ownership duration, and whether the property is owner-occupied or absentee-owned. This data is vital for targeted marketing and identifying potential sellers.

Financial and legal data includes tax assessments, open liens, mortgage balances, loan-to-value ratios, and pre-foreclosure signals like Notices of Default and auction dates. These insights help developers create tools for identifying distressed properties and evaluating financial risks.

Permits and improvements provide details about renovation activities, including solar installations, pool additions, and roof replacements, along with job valuations and contractor information. This data supports property valuation adjustments and generates leads for home improvement services.

Valuation data covers Automated Valuation Model (AVM) estimates, estimated equity, rental estimates, and After Repair Value (ARV). Lastly, contact enrichment delivers verified contact details and reachability scores, often cross-checked against Do Not Call lists, achieving 76% right-party contact accuracy, three times the industry standard.

| Data Category | Key Data Points | Business Outcome |
|---|---|---|
| Core Property | Beds, baths, square footage, construction materials, roof type, HVAC | Risk assessment, valuation accuracy |
| Ownership Info | Owner names, mailing address, length of ownership, absentee status | Targeted marketing, skip tracing |
| Financial/Liens | Tax assessments, open lien counts, mortgage balances, loan-to-value ratios | Mortgage lead generation, risk assessment |
| Pre-Foreclosure | Notice of Default, auction dates, unpaid balances | Identifying distressed investment opportunities |
| Permits | Solar, pool, roof permits, job values, contractor info | Home improvement leads, property value adjustment |

These data categories form the foundation for critical investment metrics.

Investment Metrics That Matter

To make informed real estate decisions, analytical tools must incorporate key investment metrics. Metrics like capitalization rate (cap rate) and Internal Rate of Return (IRR) are essential for comparing asset profitability and assessing long-term potential. Another key metric, Net Operating Income (NOI), serves as a primary measure of property performance. Real estate firms that use data analytics report NOI improvements of 8-12% within two years.
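These three metrics reduce to short formulas. The sketch below is a minimal illustration, assuming annual cash flows and vacancy expressed as a simple fraction of gross rent: NOI is effective gross income minus operating expenses (debt service excluded), cap rate is NOI divided by property value, and IRR is the discount rate at which net present value is zero, found here by bisection. The function names are illustrative, not any particular library's API.

```python
def net_operating_income(gross_rent: float, vacancy_rate: float,
                         operating_expenses: float) -> float:
    """NOI: effective gross income minus operating expenses (no debt service)."""
    effective_gross_income = gross_rent * (1 - vacancy_rate)
    return effective_gross_income - operating_expenses


def cap_rate(noi: float, property_value: float) -> float:
    """Capitalization rate: annual NOI divided by current property value."""
    return noi / property_value


def npv(rate: float, cash_flows: list[float]) -> float:
    """Net present value, with cash_flows[0] occurring at time 0."""
    return sum(cf / (1 + rate) ** t for t, cf in enumerate(cash_flows))


def irr(cash_flows: list[float], low: float = -0.99, high: float = 1.0,
        tol: float = 1e-9, max_iter: int = 200) -> float:
    """Internal rate of return: the rate where NPV = 0, found by bisection.

    Assumes NPV changes sign exactly once inside [low, high].
    """
    f_low = npv(low, cash_flows)
    if f_low * npv(high, cash_flows) > 0:
        raise ValueError("IRR is not bracketed by [low, high]")
    for _ in range(max_iter):
        mid = (low + high) / 2
        f_mid = npv(mid, cash_flows)
        if abs(f_mid) < tol or (high - low) / 2 < tol:
            return mid
        if f_low * f_mid < 0:
            high = mid                      # root lies in [low, mid]
        else:
            low, f_low = mid, f_mid         # root lies in [mid, high]
    return (low + high) / 2


noi = net_operating_income(120_000, 0.05, 40_000)   # 74,000
print(round(cap_rate(noi, 1_000_000), 4))            # 0.074
print(round(irr([-1_000, 0, 1_210]), 4))             # 0.1
```

Bisection is slower than Newton's method but cannot diverge once the root is bracketed, which is a reasonable trade-off for small cash-flow series.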

Other metrics include rent-to-price ratios, which highlight lucrative rental markets, and Loan-to-Value (LTV) ratios alongside estimated equity, which help identify high-equity owners or those in financial distress. After Repair Value (ARV) is especially critical for iBuyer models and fix-and-flip strategies. AI-powered tools can provide ARV assessments in under 60 seconds with accuracy rates above 92%. Firms using advanced analytics have reported a 34% improvement in investment decision accuracy and a 41% reduction in deal closure times.
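Loan-to-value, estimated equity, and rent-to-price are simple ratios once lien balances and a value estimate are available. A minimal sketch, assuming the value estimate comes from an AVM and that all open liens are summed into a combined LTV; the function names are hypothetical.

```python
def combined_ltv(open_loan_balances: list[float], estimated_value: float) -> float:
    """Combined loan-to-value: all open loan balances divided by estimated value."""
    return sum(open_loan_balances) / estimated_value


def estimated_equity(estimated_value: float, open_loan_balances: list[float]) -> float:
    """Estimated equity: estimated value minus all open loan balances."""
    return estimated_value - sum(open_loan_balances)


def rent_to_price(monthly_rent: float, price: float) -> float:
    """Monthly rent as a fraction of price; higher values favor rental investing."""
    return monthly_rent / price


loans = [200_000, 40_000]                  # first mortgage plus a second lien
print(combined_ltv(loans, 400_000))        # 0.6
print(estimated_equity(400_000, loans))    # 160000
print(rent_to_price(2_400, 300_000))       # 0.008
```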

For developers, these metrics are more than just numbers – they’re the building blocks for creating portfolio dashboards, automated underwriting systems, and predictive market intelligence tools.

Common Proptech Product Use Cases

By leveraging these metrics and data types, developers can create a variety of impactful proptech solutions.

Lead scoring and identification uses enriched data to pinpoint "motivated sellers" by analyzing factors like high equity, long ownership periods, and pre-foreclosure status. With APIs offering access to over 700 property attributes, businesses can uncover opportunities earlier than ever.
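A lead score can start as a weighted combination of the three signals named above: equity, ownership tenure, and pre-foreclosure status. This is a minimal sketch; the weights and the 20-year tenure cap are hypothetical values chosen for illustration, and a real model would be calibrated against observed conversions.

```python
from dataclasses import dataclass


@dataclass
class SellerSignals:
    equity_ratio: float       # estimated equity / estimated value, 0.0 to 1.0
    years_owned: float
    in_pre_foreclosure: bool


# Hypothetical weights (sum to 100); calibrate against your own outcomes.
EQUITY_WEIGHT = 40
TENURE_WEIGHT = 25
PRE_FORECLOSURE_WEIGHT = 35
TENURE_CAP_YEARS = 20         # assumption: tenure beyond this adds nothing


def motivated_seller_score(s: SellerSignals) -> float:
    """Score from 0 to 100; higher suggests a more motivated seller."""
    equity = min(max(s.equity_ratio, 0.0), 1.0)
    tenure = min(max(s.years_owned, 0.0) / TENURE_CAP_YEARS, 1.0)
    score = EQUITY_WEIGHT * equity + TENURE_WEIGHT * tenure
    if s.in_pre_foreclosure:
        score += PRE_FORECLOSURE_WEIGHT
    return round(score, 1)


leads = {
    "A": SellerSignals(equity_ratio=0.8, years_owned=25, in_pre_foreclosure=True),
    "B": SellerSignals(equity_ratio=0.5, years_owned=10, in_pre_foreclosure=False),
}
ranked = sorted(leads, key=lambda k: motivated_seller_score(leads[k]), reverse=True)
print(ranked)  # ['A', 'B']
```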

iBuyer models depend on accurate AVMs, ARV calculations, and market comparables to generate instant cash offers. These systems require real-time data pipelines capable of efficiently processing property details, recent sales, and neighborhood trends.
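A comparables-based estimate is one common building block behind instant offers. This is a deliberately simple sketch, not a production AVM: it assumes comps are sales in the same ZIP code within the last 180 days and within 20% of the subject's square footage, all hypothetical parameters to tune, and it returns `None` when there is too little evidence.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from statistics import median
from typing import Optional


@dataclass
class Sale:
    price: float
    sqft: float
    sale_date: date
    zip_code: str


def comps_value(subject_sqft: float, subject_zip: str, sales: list[Sale],
                as_of: date, max_age_days: int = 180,
                size_tolerance: float = 0.2, min_comps: int = 3) -> Optional[float]:
    """Median price per sq ft of recent, similar-size sales in the same ZIP."""
    cutoff = as_of - timedelta(days=max_age_days)
    comps = [
        s for s in sales
        if s.zip_code == subject_zip
        and cutoff <= s.sale_date <= as_of
        and abs(s.sqft - subject_sqft) <= size_tolerance * subject_sqft
    ]
    if len(comps) < min_comps:
        return None  # too little evidence; defer to an AVM or manual review
    return median(s.price / s.sqft for s in comps) * subject_sqft


today = date(2024, 6, 1)
sales = [
    Sale(380_000, 2_000, date(2024, 4, 10), "78701"),
    Sale(420_000, 2_100, date(2024, 3, 2), "78701"),
    Sale(410_000, 1_950, date(2024, 5, 20), "78701"),
    Sale(900_000, 2_000, date(2024, 5, 1), "94105"),   # different ZIP, ignored
    Sale(300_000, 2_000, date(2023, 1, 15), "78701"),  # too old, ignored
]
print(comps_value(2_000, "78701", sales, today))  # 400000.0
```

Using the median rather than the mean keeps a single unusual sale from dragging the estimate.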

Portfolio analytics platforms aggregate data from thousands of properties to identify underperforming assets, forecast maintenance needs, and optimize capital allocation. AI-powered document analysis can achieve 96% accuracy in lease abstraction tasks while cutting review costs by 70%.

Mortgage and lending technology integrates financial data, credit indicators, and property valuations to automate underwriting processes. Similarly, property management platforms utilize permit data, ownership records, and contact details to streamline tenant communication and maintenance workflows. All of these applications rely on reliable data pipelines that ensure clean, consistent data, enabling faster feature development and quicker responses to market demands.

Creating Better Developer Experience (DX)

Developer experience (DX) is a critical factor in determining how efficiently your engineering team can transition from an idea to a working product. In proptech, where speed-to-market can make or break success, a smooth DX isn’t just a nice-to-have – it’s a game-changer. While over 80% of developers rank clear documentation as their top priority when choosing a new API, great DX encompasses much more than just well-written guides.

Leading proptech platforms focus on reducing the time-to-first-call – the time it takes for a developer to make their first successful API request after obtaining credentials. Modern tools achieve this through interactive documentation that allows developers to test API endpoints directly in their browser, complete with live responses. This approach slashes setup time from days to mere minutes. Additionally, multi-language SDKs simplify integration by letting developers copy, run, and adapt code almost instantly.

Another key feature is sandbox environments, which provide sample data for testing. These environments allow developers to experiment with authentication flows and error handling without risking production systems. This is particularly valuable in proptech, where clear and specific error codes – such as those flagging invalid listing formats or address validation issues – can save hours of debugging and avoid unnecessary support tickets. Streamlined authentication mechanisms like OAuth2, JWT, or simple API keys, paired with step-by-step guides, further reduce onboarding time from weeks to just a few hours. With these foundations in place, let’s explore what makes DX in proptech truly effective.

What Makes Good Developer Experience in Proptech

In proptech, great DX is built on a combination of features that simplify and accelerate development. Interactive testing tools and easily navigable documentation help engineers validate data and troubleshoot issues quickly, eliminating guesswork about data formats or field availability.

Practical examples are far more useful than abstract ones. Integration guides tailored to real-world workflows – like creating a rental calculator, building a property search tool, or implementing a lead scoring system – help developers connect API responses directly to their business needs. Transparency is also crucial; clear rate limits and published performance metrics allow engineering teams to design systems that avoid bottlenecks.

Modern proptech APIs go beyond just delivering raw data. Many now include AI-powered endpoints that provide advanced analytics, such as renovation ROI, price flexibility scores, or investment potential, directly through the API. This shift from bulk data delivery to real-time REST APIs means developers can embed property intelligence into their applications without the need for complex data pipelines. For large-scale analytics or machine learning, cloud-native delivery to platforms like Snowflake, BigQuery, or Databricks eliminates the need for extensive ETL (Extract, Transform, Load) processes.

"What used to take 30 minutes now takes 30 seconds. BatchData makes our platform superhuman."

– Chris Finck, Director of Product Management, BatchData

The Developer Journey for New Features

Optimized DX removes friction at every step of integrating new features. Here’s a closer look at the typical journey:

The process starts with authentication setup, where developers obtain API credentials and configure secure access. With well-designed systems, this step can be completed in minutes rather than days.

Next, developers explore available endpoints to find the property attributes they need. With access to over 700 attributes spanning 155 million U.S. properties, clear documentation and interactive tools are essential for testing API calls, verifying response formats, and ensuring the data meets their requirements before coding begins.

During integration, official SDKs simplify the process by handling tasks like authentication, request formatting, and error management automatically. For applications targeting the U.S. market, integrating compliance features – such as ADA accessibility, CCPA privacy controls, and Fair Housing Act compliance – early on helps avoid costly revisions later.

Once integration is complete, developers move on to testing and validation. This involves verifying data accuracy, managing edge cases, and ensuring the system performs reliably. Finally, during production deployment, teams monitor API usage, manage rate limits, and set up alerts for errors or performance issues. While simple features may go from concept to production in minutes, more complex systems can take days. Poor DX, however, can drag this timeline out significantly.
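Managing rate limits in production usually means retrying throttled requests with backoff. A minimal sketch, assuming your client raises a hypothetical `RateLimitError` on HTTP 429 and may pass along a Retry-After value; the `sleep` parameter is injectable so the logic can be tested without actually waiting.

```python
import random
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class RateLimitError(Exception):
    """Hypothetical error a client would raise on HTTP 429 Too Many Requests."""

    def __init__(self, retry_after: Optional[float] = None):
        super().__init__("rate limited")
        self.retry_after = retry_after  # seconds, e.g. from a Retry-After header


def call_with_backoff(fn: Callable[[], T], max_attempts: int = 5,
                      base_delay: float = 0.5, max_delay: float = 30.0,
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Retry fn on RateLimitError with capped exponential backoff and full jitter."""
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except RateLimitError as err:
            if attempt == max_attempts:
                raise  # give up and surface the error to monitoring
            if err.retry_after is not None:
                delay = err.retry_after  # honor the server's hint when present
            else:
                cap = min(max_delay, base_delay * 2 ** (attempt - 1))
                delay = random.uniform(0, cap)  # jitter spreads out retries
            sleep(delay)
    raise RuntimeError("unreachable")
```

Jitter matters when many workers hit the same limit at once: without it, they tend to retry in lockstep and collide again.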

High-Friction vs Optimized DX

The contrast between a high-friction and an optimized developer experience is stark, with measurable benefits for the latter. Optimized systems provide instant API keys and interactive testing environments, while legacy platforms often rely on manual approval processes and outdated PDF documentation.

For example, with an optimized DX, developers can make a successful first API call within minutes using pre-built SDKs and clear examples. In contrast, high-friction systems can leave developers stuck troubleshooting for weeks, wrestling with vague error messages and poorly documented edge cases. Context-aware error codes tailored to real estate scenarios allow developers to quickly diagnose and resolve issues, whereas generic HTTP errors often lead to frustrating delays and support tickets.

| Feature | Optimized DX | High-Friction DX |
|---|---|---|
| Onboarding | Instant API key and interactive testing | Manual approval and static PDF documentation |
| Integration Time | Minutes to first successful call | Days or weeks of troubleshooting |
| Testing | Free sandbox/staging environments | Testing against live production data |
| Error Handling | Context-aware, real estate–specific codes | Vague, generic HTTP error messages |
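Context-aware errors are most useful when the client turns them into actionable messages. The error codes and the JSON shape below (`{"error": {"code": ..., "message": ...}}`) are hypothetical placeholders; substitute whatever your provider documents. Non-JSON bodies, such as an HTML page from a proxy, fall back to a generic error instead of crashing the handler.

```python
import json

# Hypothetical provider error codes mapped to developer-facing hints.
HINTS = {
    "ADDRESS_UNVERIFIED": "Standardize the address to USPS format and retry.",
    "INVALID_APN_FORMAT": "Send the APN together with the county FIPS code.",
    "LISTING_FORMAT_INVALID": "Check required listing fields against the schema.",
}


class ApiError(Exception):
    def __init__(self, status: int, code: str, message: str):
        super().__init__(f"{status} {code}: {message}")
        self.status = status
        self.code = code
        self.message = message

    @property
    def hint(self) -> str:
        return HINTS.get(self.code, "See the provider's error reference.")


def parse_error(status: int, body: str) -> ApiError:
    """Turn an error response body into a typed error, tolerating non-JSON bodies."""
    try:
        err = json.loads(body)["error"]
        return ApiError(status, err.get("code", "UNKNOWN"), err.get("message", ""))
    except (ValueError, KeyError, TypeError, AttributeError):
        return ApiError(status, "UNKNOWN", body)


e = parse_error(422, '{"error": {"code": "INVALID_APN_FORMAT", "message": "bad APN"}}')
print(e.code, "->", e.hint)
```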

Building Scalable Data-Driven Systems

Creating scalable proptech systems starts with making smart architectural choices early on. While 61% of commercial real estate firms still rely on outdated technology infrastructures, modern proptech demands systems that can handle real-time responsiveness while processing massive datasets for analytics and machine learning. As with developer experience, these early foundational decisions shape everything built on top of them.

At the heart of scalable proptech lies effective property identity management. In the U.S., real estate data is fragmented across MLS systems, county records, and tax assessors. Without a reliable identifier strategy, such as using APNs (Assessor Parcel Numbers) or geocodes, linking data across providers becomes a challenge, especially when managing datasets that span 155 million properties. Even a 1% error rate would mean roughly 1.55 million mismatched records, so consistency becomes more critical as your platform grows.
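One way to make APNs usable as a join key: because parcel numbers are assigned by each county and are not unique nationwide, combine a normalized APN with the five-digit county FIPS code. This is a sketch under that assumption; the `FIPS:APN` key format is illustrative, and 06037 is the FIPS code for Los Angeles County, California.

```python
import re

FIPS_PATTERN = re.compile(r"\d{5}")  # 2-digit state code + 3-digit county code


def normalize_apn(apn: str) -> str:
    """Uppercase and drop separators so '5432-017-009' and '5432 017 009' match.

    Caution: stripping separators can cause collisions where a county's
    formatting is significant, so verify this rule per county.
    """
    return re.sub(r"[^0-9A-Z]", "", apn.upper())


def property_key(county_fips: str, apn: str) -> str:
    """APNs are only unique within a county, so prefix with the county FIPS code."""
    if not FIPS_PATTERN.fullmatch(county_fips):
        raise ValueError(f"expected a 5-digit county FIPS code, got {county_fips!r}")
    normalized = normalize_apn(apn)
    if not normalized:
        raise ValueError("empty APN")
    return f"{county_fips}:{normalized}"


print(property_key("06037", "5432-017-009"))  # 06037:5432017009
```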

A well-designed system can also significantly cut costs and improve returns. For example, AvalonBay reduced hosting expenses by 40% and increased returns by 25%. Choosing the right data delivery method for each use case is a major part of this. Real-time REST APIs are ideal for on-demand lookups and user-facing features, offering sub-second responses for tasks like property searches or contact enrichment. For large-scale analytics, machine learning, or business intelligence, direct cloud access to platforms like Snowflake or BigQuery eliminates the need for traditional ETL processes. These foundational decisions pave the way for modular architectures and efficient data modeling.

Reference Architectures for Proptech

Modern proptech systems often rely on modular microservices architectures, which divide functionality into independent services such as accounting, identity management, property data, and payments. This structure allows for scaling individual components as needed. For instance, during open-house weekends when traffic can spike 5–10x, the property search service can scale up without disrupting other critical services like payment processing or user authentication.

For rapid API development, many teams use Node.js, while Golang is preferred for compute-intensive tasks. A central API gateway can efficiently manage traffic across various applications, including agent portals, consumer search tools, and admin dashboards. GraphQL further optimizes front-end development by allowing teams to request only the specific property data they need.

When managing U.S. real estate portfolios across multiple states, region-aware architectures are essential. Privacy and compliance rules vary by state; California's CCPA, for example, imposes requirements that differ from other states' privacy laws. Assigning each record a home region in your data model can improve latency and ensure compliance. Tools like Kafka or RabbitMQ enable event-driven coordination, ensuring that a failure in one region doesn't disrupt workflows in another.

The data layer typically includes two main components: data lakes for raw data used in machine learning and columnar warehouses like Snowflake, BigQuery, or Databricks for structured data that powers business analytics. This separation allows data scientists to experiment with raw data while enabling business analysts to run optimized queries on structured datasets.

Data Modeling Best Practices for U.S. Proptech

Effective data modeling for U.S. real estate requires organizing schemas into distinct layers:

- Core Property Layer: Includes physical details like square footage, year built, and lot size.

- Ownership Layer: Tracks transaction history, current owners, and resolves corporate entities to link LLC-owned properties to individuals.

- Financials Layer: Covers mortgages, liens, tax assessments, and valuations.

- Market Data Layer: Includes MLS listings, days on market, and pricing trends.
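The four layers map naturally onto separate record types joined by a shared property key. A minimal sketch, assuming Python dataclasses and illustrative field names rather than any specific provider's schema; `current_owner` shows how keeping ownership as history still lets you answer "who owns it now".

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class CoreProperty:            # physical attributes
    property_key: str
    square_feet: Optional[int]
    year_built: Optional[int]
    lot_size_sqft: Optional[int]


@dataclass
class Ownership:               # one row per transfer, kept as history
    property_key: str
    owner_name: str
    acquired_on: date
    is_entity: bool                            # e.g. an LLC
    resolved_individual: Optional[str] = None  # filled in by entity resolution


@dataclass
class Financials:
    property_key: str
    open_mortgage_balance: float
    open_lien_count: int
    assessed_value: float
    as_of: date


@dataclass
class MarketListing:
    property_key: str
    list_price: Optional[float]
    days_on_market: Optional[int]
    as_of: date


def current_owner(history: list[Ownership]) -> Optional[Ownership]:
    """The most recent transfer wins; earlier rows remain for history."""
    return max(history, key=lambda o: o.acquired_on, default=None)
```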

Standardizing addresses to USPS-compliant formats ensures data quality and faster processing. Pairing this with stable identifiers like APNs or geocodes maintains accuracy across mapping and routing services.
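The idea behind USPS standardization can be illustrated with a tiny subset of the abbreviations in USPS Publication 28. This sketch is an illustration only, assuming simple token-by-token replacement; production systems typically use CASS-certified software, which also validates that the address actually exists.

```python
import re

# A small subset of USPS Publication 28 standard abbreviations.
SUFFIXES = {"STREET": "ST", "AVENUE": "AVE", "BOULEVARD": "BLVD",
            "ROAD": "RD", "DRIVE": "DR", "LANE": "LN", "COURT": "CT"}
DIRECTIONALS = {"NORTH": "N", "SOUTH": "S", "EAST": "E", "WEST": "W"}
UNIT_DESIGNATORS = {"APARTMENT": "APT", "SUITE": "STE"}
ABBREVIATIONS = {**SUFFIXES, **DIRECTIONALS, **UNIT_DESIGNATORS}


def standardize_street_line(line: str) -> str:
    """Uppercase, treat periods and commas as separators, abbreviate known tokens.

    Naive: a street actually named "North" would also be abbreviated.
    """
    tokens = re.sub(r"[.,]", " ", line.upper()).split()
    return " ".join(ABBREVIATIONS.get(tok, tok) for tok in tokens)


print(standardize_street_line("123 North Main Street, Apartment 4"))
# 123 N MAIN ST APT 4
```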

Historical snapshots are key for data storage. Property valuations, tax assessments, and sale prices should be stored as time-versioned records, enabling trend analysis and market monitoring. Implementing entity resolution in the ownership layer also helps connect properties owned by LLCs to their true individual owners.
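Time-versioned storage means never overwriting a value, only appending a dated record, so any past state can be reconstructed. A minimal in-memory sketch of an as-of lookup, assuming one series per property attribute; in a warehouse the same idea is usually a table keyed by property and effective date.

```python
from bisect import bisect_right
from datetime import date
from typing import Optional


class ValueHistory:
    """Append-only history of one attribute (e.g. assessed value) for one property."""

    def __init__(self) -> None:
        self._dates: list[date] = []
        self._values: list[float] = []

    def record(self, effective: date, value: float) -> None:
        # Keep entries sorted by effective date; late-arriving records slot in place.
        i = bisect_right(self._dates, effective)
        self._dates.insert(i, effective)
        self._values.insert(i, value)

    def as_of(self, when: date) -> Optional[float]:
        """Value in effect on `when`, or None if nothing was recorded yet."""
        i = bisect_right(self._dates, when)
        return self._values[i - 1] if i else None


assessed = ValueHistory()
assessed.record(date(2022, 1, 1), 300_000)
assessed.record(date(2024, 1, 1), 360_000)
assessed.record(date(2023, 1, 1), 330_000)  # arrives late, still ordered
print(assessed.as_of(date(2023, 6, 30)))    # 330000
```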

For bulk data delivery, Parquet files stored in the cloud are a smart choice. Columnar formats improve query performance and reduce costs compared to row-based formats. Given that comprehensive property models can include over 1,000 data points per parcel, efficient storage and retrieval are vital for enhancing user experience.

Maintaining data quality requires a mix of automated tests and human oversight. Automated tests should run with every data refresh, but human quality checks catch edge cases that algorithms might miss. For outreach features, compliance flags like Federal DNC (Do Not Call) lists and litigator scrubbing should be built into your data model to minimize legal risks.
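Automated checks that run on every refresh can be as simple as a function that returns a list of problems and fails the load when the list is non-empty. A sketch, assuming records arrive as dictionaries; the field names and the plausible `year_built` range are illustrative assumptions.

```python
from collections import Counter


def quality_issues(records: list[dict], current_year: int) -> list[str]:
    """Return human-readable data-quality problems found in a refreshed batch."""
    issues = []
    counts = Counter(r.get("property_key") for r in records)
    for key, n in counts.items():
        if key is None:
            issues.append(f"{n} record(s) missing property_key")
        elif n > 1:
            issues.append(f"duplicate property_key {key} ({n} records)")
    for r in records:
        key = r.get("property_key")
        sqft = r.get("square_feet")
        if sqft is not None and sqft <= 0:
            issues.append(f"{key}: non-positive square_feet {sqft}")
        year = r.get("year_built")
        if year is not None and not 1600 <= year <= current_year + 1:
            issues.append(f"{key}: implausible year_built {year}")
    return issues


batch = [
    {"property_key": "06037:1", "square_feet": 1_500, "year_built": 1990},
    {"property_key": "06037:1", "square_feet": 0, "year_built": 2090},
]
for problem in quality_issues(batch, current_year=2024):
    print(problem)
```

In a pipeline, a non-empty result would block promotion of the batch and route the flagged rows to human review.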

Choosing Data Delivery Methods

The choice of data delivery method depends on your use case, and most systems use a mix of API-driven, bulk, and hybrid approaches.

- Real-time REST APIs are perfect for on-demand property intelligence in user-facing applications where sub-second latency matters. APIs allow you to retrieve specific data without downloading entire datasets.

- Bulk data delivery works well for foundational tasks like backfilling databases, training machine learning models, or large-scale market analysis. Modern bulk delivery methods, such as direct cloud sharing with platforms like Snowflake or BigQuery, eliminate the need for complex ETL pipelines. This reduces engineering effort and infrastructure costs.

- Hybrid architectures combine the strengths of both approaches. APIs can handle daily updates to keep records current, while bulk transfers maintain a large local dataset for business intelligence queries. This setup also ensures resilience by blending data from thousands of sources (over 3,200 in some cases), mitigating risks from localized outages.

| Delivery Method | Best Use Case | Latency | Engineering Effort | Infrastructure Cost |
|---|---|---|---|---|
| Real-Time REST API | On-demand lookups, user-facing apps, dynamic analytics | Sub-second | Low (standard SDKs) | Pay-as-you-go per request |
| Bulk Data Delivery | ML training, BI dashboards, historical analysis | Scheduled/batch | Low (direct cloud sharing) | Storage-based licensing |
| Hybrid Approach | Real-time updates plus large-scale analytics | Variable | Medium | Combination of both |
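In a hybrid setup, the core operation is applying API-sourced updates on top of the latest bulk snapshot. A minimal sketch, assuming each record carries a `property_key` and an `updated_at` timestamp and that a newer record replaces the older one wholesale; field-level merging is a reasonable alternative when updates are partial.

```python
from datetime import datetime


def apply_updates(snapshot: dict[str, dict], updates: list[dict]) -> dict[str, dict]:
    """Return a new snapshot where each update wins only if it is newer."""
    merged = dict(snapshot)  # leave the bulk snapshot itself untouched
    for rec in updates:
        key = rec["property_key"]
        current = merged.get(key)
        if current is None or rec["updated_at"] > current["updated_at"]:
            merged[key] = rec
    return merged


bulk = {"06037:1": {"property_key": "06037:1", "status": "off_market",
                    "updated_at": datetime(2024, 5, 1)}}
daily = [
    {"property_key": "06037:1", "status": "pre_foreclosure", "updated_at": datetime(2024, 6, 2)},
    {"property_key": "06037:2", "status": "listed", "updated_at": datetime(2024, 6, 2)},
]
merged = apply_updates(bulk, daily)
print(merged["06037:1"]["status"], len(merged))  # pre_foreclosure 2
```

Comparing timestamps rather than blindly overwriting makes the merge safe to re-run if an older API batch is replayed.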

The choice isn’t just technical – it’s also economic. API-driven systems offer predictable per-request costs, while bulk delivery involves licensing and storage fees. For scenarios requiring both real-time property searches and nightly market analysis across millions of records, a hybrid approach often provides the best value. Matching your delivery method to your specific usage patterns is key to optimizing costs and performance. Next, we’ll dive into strategies for scaling performance to complement these delivery methods.


Scaling Performance and Operations

After discussing scalable architectures, let’s dive into the challenges and solutions for overcoming performance bottlenecks in U.S. proptech.

Common Bottlenecks in U.S. Proptech Scaling

One of the biggest hurdles in scaling proptech isn’t purely technical – it’s data silos and outdated systems. Essential deal data often ends up scattered across local drives or shared folders instead of being centralized in dedicated software. This leads to inaccurate records and a lack of real-time visibility. Teams frequently rely on manual processes to pull data from email, Excel, and Salesforce, creating significant inefficiencies when scaling operations.

On the technical front, N+1 API patterns cause major slowdowns: making 1,000 separate API calls for 1,000 properties quickly runs into rate limits and slows response times. Unstandardized data is another issue, since comparing metrics across sources requires extensive manual effort when data isn't standardized from the start, which directly affects operational performance. Even with advanced systems in place, offline data sources remain a pain point: government-held data that isn't digitized adds hours of manual effort to processes.
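The usual fix for the N+1 pattern is to deduplicate keys and send them in batches. This sketch assumes a hypothetical `fetch_batch` function standing in for a provider's bulk-lookup endpoint, with an assumed limit of 100 keys per request; check your provider's actual batch size.

```python
from typing import Callable, Iterator, TypeVar

K = TypeVar("K")
V = TypeVar("V")


def chunked(items: list[K], size: int) -> Iterator[list[K]]:
    for start in range(0, len(items), size):
        yield items[start:start + size]


def lookup_all(keys: list[K], fetch_batch: Callable[[list[K]], dict[K, V]],
               batch_size: int = 100) -> dict[K, V]:
    """Fetch many records with ceil(n / batch_size) calls instead of n calls."""
    unique = list(dict.fromkeys(keys))  # dedupe while preserving order
    results: dict[K, V] = {}
    for batch in chunked(unique, batch_size):
        results.update(fetch_batch(batch))
    return results


calls = []


def fake_fetch_batch(batch):  # hypothetical stand-in for a real bulk endpoint
    calls.append(len(batch))
    return {k: {"property_key": k} for k in batch}


keys = [f"06037:{i}" for i in range(1_000)]
records = lookup_all(keys, fake_fetch_batch)
print(len(records), len(calls))  # 1000 10
```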

"One critical point to note is that whilst we have come a long way, data is still a bottleneck for efficiency gains. In particular, government institutions holding data offline introduces friction and man-hours to the process."

- Alex Stroud, Analyst, Concentric

These challenges highlight the need for targeted strategies to improve performance.

Performance Strategies for Large-Scale Systems

Caching is one of the most effective ways to tackle performance issues. Tools like Redis or Memcached can store frequently accessed data – such as property images, tenant details, and market stats – allowing for near-instantaneous loading without repeatedly querying your database. Browser caching can handle static assets, while server-side caching is ideal for dynamic content.
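Cache-aside is the usual pattern: check the cache, fall back to the source on a miss, then store the result with an expiry. The in-process class below is a stand-in for Redis or Memcached (with Redis you would typically set the expiry through the `EX` option of `SET`); the loader and its values are hypothetical, and the injectable clock exists only to make expiry easy to test.

```python
import time
from typing import Any, Callable, Dict, Hashable, Tuple


class TTLCache:
    """Minimal cache-aside helper with a per-entry time-to-live."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        self._store: Dict[Hashable, Tuple[float, Any]] = {}

    def get_or_load(self, key: Hashable, loader: Callable[[Hashable], Any]) -> Any:
        now = self._clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]                      # fresh hit
        value = loader(key)                      # miss or expired: go to the source
        self._store[key] = (now + self._ttl, value)
        return value


loads = []


def load_market_stats(zip_code):                 # hypothetical slow query
    loads.append(zip_code)
    return {"zip": zip_code, "median_price": 512_000}  # placeholder value


now = [0.0]
cache = TTLCache(ttl_seconds=300, clock=lambda: now[0])
cache.get_or_load("78701", load_market_stats)
cache.get_or_load("78701", load_market_stats)    # served from cache
now[0] = 301.0
cache.get_or_load("78701", load_market_stats)    # expired, reloaded
print(loads)  # ['78701', '78701']
```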

Asynchronous loading is another key strategy. By fetching essential data (like address, price, and primary images) in real time, and loading additional details (like tax history or ownership records) asynchronously, you can keep your interface responsive even when dealing with large datasets.
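The same idea in code: start the slower detail requests immediately, but render the essentials as soon as they arrive. A sketch using `asyncio`; the three fetch functions are hypothetical stubs with simulated latency, and `render` stands in for whatever updates your UI.

```python
import asyncio
from typing import Any, Callable


async def fetch_summary(key: str) -> dict:        # hypothetical: address, price, photo
    await asyncio.sleep(0.01)
    return {"key": key, "price": 450_000}


async def fetch_tax_history(key: str) -> list:    # hypothetical, slower
    await asyncio.sleep(0.05)
    return [{"year": 2023, "tax": 5_400}]


async def fetch_ownership(key: str) -> list:      # hypothetical, slower
    await asyncio.sleep(0.05)
    return [{"owner": "Jane Smith"}]


async def load_property_page(key: str, render: Callable[[str, Any], None]) -> None:
    # Schedule the detail requests first so they run while the summary loads.
    details = asyncio.gather(fetch_tax_history(key), fetch_ownership(key))
    render("summary", await fetch_summary(key))   # page is usable at this point
    tax_history, ownership = await details
    render("details", {"tax_history": tax_history, "ownership": ownership})


events = []
asyncio.run(load_property_page("06037:5432017009",
                               lambda section, data: events.append(section)))
print(events)  # ['summary', 'details']
```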

To handle peak usage, consider auto-scaling your property search services. Pair this with Content Delivery Networks (CDNs) to reduce latency by serving assets from geographically distributed servers. This ensures fast delivery, no matter where your users are located.

For better efficiency, switch from ad-hoc data pulls to structured pipelines. A well-designed deal management platform can cut data aggregation time from hours to just minutes. Features like conditional checklists and automated workflows can move deals through stages seamlessly – for example, automatically routing a deal to a VP once all required data is gathered.

Ad-hoc Pulls vs. Designed Pipelines

Choosing the right data retrieval method can significantly impact your system’s scalability and efficiency. Ad-hoc API pulls work well for transactional needs, offering quick responses for single-property data. However, using this approach for large-scale analysis – like making millions of API calls – can create unnecessary overhead and risk hitting rate limits.

On the other hand, designed pipelines are better suited for large-scale operations. Using tools like Snowflake, BigQuery, or Parquet files on S3, you can bypass traditional ETL processes entirely. This "No-ETL" approach allows standardized data to flow directly into your warehouse, handling billions of records without the constraints of API rate limits. For tasks like training machine learning models or running complex analytics, cloud pipelines provide higher throughput and lower costs per record.

| Strategy | Implementation Method | Primary Benefit |
|---|---|---|
| Performance Optimization | Asynchronous Data Loading | Prevents UI freezing during heavy data fetches |
| Scalability | Cloud Data Sharing (Snowflake/BigQuery) | Handles billions of records without API rate limits |
| Reliability | CI/CD Pipelines & Automated Testing | Ensures consistent quality across distributed teams |
| Latency Reduction | Redis/Memcached Caching | Delivers near-instantaneous loading for repetitive content |

Process discipline pays off as well: 59% of companies outsource development to save costs, and 78% report better outcomes when structured processes like CI/CD and automated testing are in place. Your choice of pipeline design should align with your operational needs: real-time APIs for user-facing features, bulk data delivery for analytics, or a hybrid approach for systems requiring both. This decision will shape your system's performance, infrastructure costs, and engineering effort for years to come.

Preparing for Future Proptech Trends

The real estate tech world is evolving rapidly, moving beyond simple automation to embrace autonomous systems. A key concept shaping this future is "PropOS" – a property operating system powered by AI agents, digital twins, and integrated data layers that enhance existing platforms. This marks a transformative shift in how proptech operates.

Emerging Trends in Proptech Development

With scalable architectures and optimized developer experiences (DX) at the core, the next wave of proptech innovation is redefining how the industry approaches operational intelligence. These trends aren’t just about improving efficiency – they’re about reshaping what’s possible.

Agentic architectures are replacing basic chatbots with autonomous AI agents capable of perceiving, learning, and acting independently. These advanced systems are already delivering results, cutting lead-to-lease timelines by 65% and increasing conversion rates by 8%.

Digital twins and computer vision bring real-world properties to life in 3D simulations, providing real-time insights into asset behavior. This technology enables remote property assessments with 98% accuracy, potentially reducing renovation timelines by six months. It also delivers tangible benefits like up to 30% energy savings and extended hardware lifespans by at least a year.

Verified communication data is now essential for both compliance and efficiency, safeguarding against fraud and litigation. Advanced tools for contact enrichment are achieving a 76% accuracy rate for right-party contacts – three times the industry average. Proptech adoption has surged, with 96% of investors noting that the pandemic accelerated the sector’s technological progress.

"Proptech’s trajectory points toward a propOS combining autonomous agents managing routine operations, digital twins providing real-time monitoring and simulation, and generative AI exploring solution spaces at superhuman speeds."

- Greg Lindsay, Author/Consultant, PwC/ULI

Future-Proofing with Custom Solutions

As previously mentioned, flexible data delivery is a cornerstone of modern proptech. Future-proofing builds on this by ensuring systems are resilient and adaptable to ever-evolving technology landscapes.

To achieve this, multi-source resilience is key. Platforms pulling data from thousands of sources – such as over 3,200 data streams – can avoid disruptions caused by county-level outages. This ensures continuous data flow and eliminates single points of failure that could otherwise cripple operations.

Flexible data delivery is another critical factor. Your architecture should accommodate various consumption methods: RESTful APIs for quick, low-latency lookups in user-facing applications, and bulk data delivery through platforms like Snowflake or AWS S3 for training machine learning models or performing large-scale analytics. This flexibility allows you to adapt to changing product needs without overhauling your infrastructure.

Professional services can also speed up development. "Data concierge" services can audit your existing systems, normalize raw data, and create reliable pipelines that integrate seamlessly into your tech stack. Automated compliance tools – such as real-time phone and address verification – help keep databases clean and protect your outreach efforts from legal risks.

"The question isn’t whether buildings will drive themselves, but how quickly the industry will learn how to steer them."

- Greg Lindsay, Author/Consultant, PwC/ULI

Conclusion: Your Blueprint for Faster Proptech Development

Building competitive proptech solutions in the U.S. requires a strong foundation based on three key elements: quick developer integration, precise data intelligence, and scalable architecture. By partnering with a data provider that offers normalization, enrichment, and performance guarantees for over 155 million U.S. properties, your team can focus on creating engaging user experiences instead of wrestling with complex ETL processes or messy data inconsistencies. As outlined earlier, streamlined data handling allows your team to innovate more efficiently.

These core elements deliver tangible results. Clear documentation and sandbox environments help developers roll out features in weeks rather than months. Clean, standardized property data ensures consistent product performance across all U.S. markets. Additionally, reliable APIs keep search and detail pages running smoothly, even during high-traffic periods, which directly boosts user retention and conversion rates.

Strategic infrastructure decisions can further accelerate timelines and reduce the need for reengineering. For example, combining real-time APIs for user-facing queries with bulk data delivery for analytics can support both interactive experiences and machine learning initiatives. Accessing data from over 3,200 streams ensures your systems remain resilient, even during localized outages. Professional services, such as data audits and pipeline design, can also turn months of internal work into just a few weeks, enabling you to seize market opportunities without delay.

The proptech industry is rapidly advancing toward technologies like autonomous agents, digital twins, and AI-powered workflows. Teams that prioritize dependable, developer-friendly data platforms today will be better positioned to iterate quickly, launch smarter features, and scale effectively as the market evolves. To stay ahead, audit your integrations, test in sandbox environments, and ensure your technical strategies align with your business goals for faster, more dependable growth in proptech.

FAQs

What types of data are essential for PropTech solutions?

PropTech platforms rely on a variety of data categories to generate precise and actionable property insights. Here’s a breakdown of the key types of information they use:

- Property details: This includes essential attributes like the number of bedrooms and bathrooms, square footage, lot size, zoning classifications, and construction specifics (such as roof type, HVAC systems, and parking). Legal identifiers like Assessor’s Parcel Numbers (APN) are also part of this category.

- Ownership information: Data on property owners is critical. This covers names, mailing addresses, ownership history, absentee-owner designations, and whether the owner is an individual or a corporation.

- Contact data: Reliable communication is made possible with verified phone numbers, email addresses, reachability scores, and compliance with Do Not Call (DNC) regulations.

- Valuation and equity: Insights into property value estimates, equity levels, loan-to-value (LTV) ratios, and rental income estimates help guide pricing and investment decisions.

- Financing details: Information on mortgages and liens, such as loan amounts, interest rates, lender details, and refinance history, provides a clear picture of financial structures and obligations.

- Market status: Pre-foreclosure and active listing data, including auction dates, unpaid balances, and foreclosure statuses, play a crucial role in identifying opportunities and tracking market trends.

By integrating these diverse data categories, PropTech solutions empower users to make quicker and more informed decisions when analyzing properties and navigating the real estate market.

What are the best practices for enhancing the developer experience with proptech APIs?

To get the most out of proptech APIs, it’s important to fine-tune your development process. Start by addressing latency issues – use cloud-native or load-balanced endpoints, and set up caching for data that’s accessed often. This helps ensure your application runs faster and more efficiently. Equally important is working with datasets that are clean and dependable, as this reduces errors and saves you valuable time.

Another key factor is choosing APIs with straightforward onboarding documentation and accessible, responsive support. These features can make your first API call quicker and help maintain consistent performance throughout your project. By focusing on these elements, you’ll not only speed up project timelines but also reduce bugs and deliver a better experience for your users.

What key trends in proptech should developers focus on?

The proptech industry is evolving rapidly, with three major trends redefining how developers build and innovate:

- AI-Powered Analytics: Artificial intelligence is revolutionizing how data is processed and understood. Tasks that once took days, like analyzing property images for investment potential or spotting market trends, can now be completed in minutes. This shift is enabling faster, smarter decision-making.

- Cloud-Native, Real-Time APIs: APIs hosted in the cloud are becoming indispensable. These tools offer instant access to up-to-date property and valuation data, while also being scalable. Whether you’re working on a small prototype or a large enterprise platform, these APIs make it easy to scale without a hitch.

- Improved Developer Experience (DX): The industry is moving beyond just offering raw data endpoints. Developers now expect tools that enhance their workflow, such as interactive documentation, built-in testing environments, and features that allow for quick "time-to-first-call." These improvements help reduce bugs, speed up integration, and accelerate product launches.

By staying ahead of these trends, developers can simplify their processes, cut down development time, and build solutions that drive meaningful results in the proptech space.