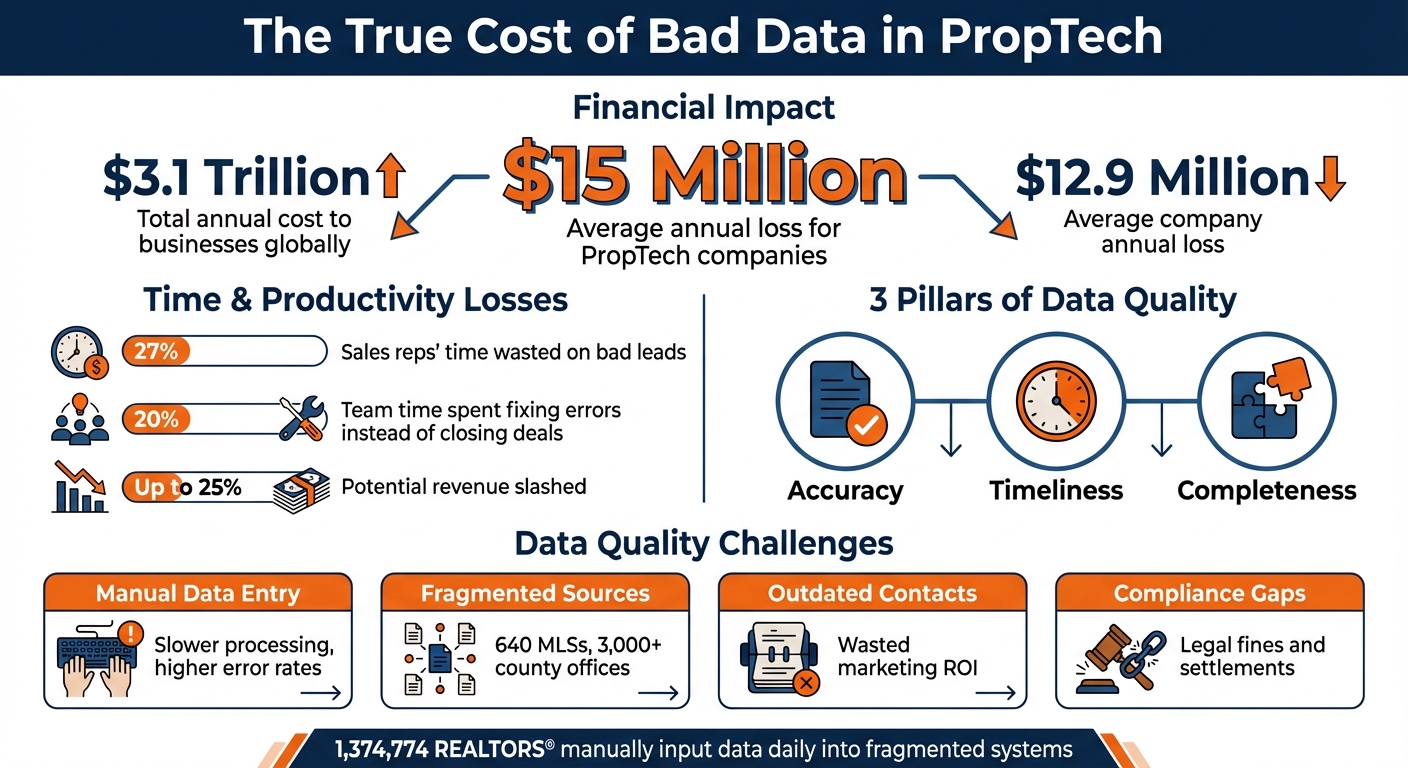

Bad data is expensive. By one widely cited estimate, organizations lose an average of $15 million a year to errors, outdated information, and inefficiencies, and PropTech companies are not exempt. Inaccurate data wastes time, inflates costs, and undermines decision-making, affecting everything from sales to compliance. For example, 27% of sales reps’ time is lost chasing bad leads, while outdated contact details and duplicate records inflate spending on tools like CRMs.

Key Takeaways:

- Revenue Impact: Poor data can slash up to 25% of potential revenue.

- Operational Losses: Teams spend 20% of their time fixing errors instead of closing deals.

- Compliance Risks: Incomplete or incorrect data can lead to hefty fines under laws like GDPR or CCPA.

- Scalability Issues: AI tools amplify mistakes when fed bad data, creating large-scale problems.

The solution? Prioritize accuracy, timeliness, and completeness in your data. Companies like BatchData show how robust systems can reduce errors, verify contacts, and maintain up-to-date property records for better decision-making. In an industry where deals hinge on reliable information, investing in data quality isn’t optional – it’s the backbone of profitability.

The Cost of Poor Data Quality in PropTech: Key Statistics and Impacts


Core Dimensions of Data Quality

When it comes to judging whether data is "high quality," three dimensions matter most for property data: accuracy, timeliness, and completeness. Together they determine whether property data supports profitable decisions or leads to expensive errors. In PropTech, where many separate MLS databases coexist, maintaining all three is especially challenging.

The challenge grows because the National Association of REALTORS® has roughly 1.37 million members, many of whom enter data by hand into these fragmented systems every day. Without a robust framework for evaluating data quality, it’s hard to tell whether your investment decisions rest on reliable information or shaky assumptions. As Datafold puts it, "Having high quality data does not guarantee good insights, but having bad data almost certainly guarantees flawed conclusions and misguided decisions." Let’s take a closer look at how accuracy, timeliness, and completeness affect investment outcomes.

Accuracy: The Cornerstone of Trustworthy Data

Accurate data reflects reality. In real estate, this means property records must carry verified details such as the correct number of bedrooms and bathrooms, accurate square footage, and reliable owner contact information. Even minor errors, like spelling "Mapel" instead of "Maple" or confusing "Unit 1" with "Unit 1A," can break record matching and distort market analysis. Worse, some agents deliberately manipulate data to get around MLS rules, inventing unit numbers like "2OJ" or "TH3L." These tactics generate ghost inventory, skewing analytics and misleading investors about how many properties are actually available.
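Both kinds of error can be caught before they reach analytics: a fuzzy comparison against a reference list of known street names catches typos like "Mapel," and a pattern check on unit designators flags values like "2OJ" for review. The sketch below uses Python's standard `difflib` module; the street list, the 0.75 similarity cutoff, and the assumed unit format (one to five digits plus an optional letter) are illustrative assumptions, not an industry standard.

```python
import difflib
import re
from typing import Optional

# Hypothetical reference list of valid street names for a single ZIP code.
KNOWN_STREETS = ["MAPLE", "MAIN", "OAK", "ELM"]

# Assumed unit format: 1-5 digits with an optional trailing letter ("1", "1A", "204B").
UNIT_PATTERN = re.compile(r"\d{1,5}[A-Z]?")


def match_street(raw: str, cutoff: float = 0.75) -> Optional[str]:
    """Return the closest known street name, or None if nothing is similar enough."""
    matches = difflib.get_close_matches(raw.strip().upper(), KNOWN_STREETS, n=1, cutoff=cutoff)
    return matches[0] if matches else None


def unit_needs_review(unit: str) -> bool:
    """Flag unit designators that do not fit the assumed format for human review."""
    return UNIT_PATTERN.fullmatch(unit.strip().upper()) is None


print(match_street("Mapel"))     # MAPLE
print(unit_needs_review("1A"))   # False
print(unit_needs_review("2OJ"))  # True
```

Flagging rather than rejecting matters here: legitimate designators such as "PH" (penthouse) also fail the simple pattern, so a person should make the final call.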

Errors in property characteristics, often stemming from outdated town records, also lead to inaccurate valuations. For instance, a property listed as having four bedrooms might have only two legal bedrooms, with the other two created through unpermitted conversions. Decisions based on such faulty information can result in significant financial missteps. That’s why address verification and contact validation are so critical: they stop marketing dollars from being wasted on disconnected phone numbers or undeliverable mail and keep searches from hitting dead ends.

Timeliness: The Importance of Staying Current

Information loses value as it ages. In fast-moving real estate markets, even a one-day delay in listing updates can mean the difference between closing a deal and losing the opportunity. Regular updates on property transactions, ownership records, and market trends provide a competitive edge, helping investors identify motivated sellers and act quickly.

While real-time data is ideal, it isn’t essential for every scenario. For most property analyses, updates every 30 minutes, or even every 24 hours, are often sufficient. Modern data platforms can draw on more than 3,200 sources, blending inputs so that an outage at a single county office doesn’t interrupt the flow of updates.
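Whichever refresh window you choose, it helps to make it an explicit, testable rule. The minimal sketch below flags records whose last update is older than a configurable maximum age; the `feed` contents and listing IDs are hypothetical, and timestamps are assumed to be timezone-aware UTC values.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def is_stale(last_updated: datetime,
             max_age: timedelta = timedelta(hours=24),
             now: Optional[datetime] = None) -> bool:
    """Return True when a record was last updated more than `max_age` ago.

    Timestamps are assumed to be timezone-aware UTC values.
    """
    if now is None:
        now = datetime.now(timezone.utc)
    return now - last_updated > max_age


# Example: a fixed "now" keeps the output reproducible.
now = datetime(2025, 1, 2, 12, 0, tzinfo=timezone.utc)
feed = {
    "listing-1": datetime(2025, 1, 2, 11, 15, tzinfo=timezone.utc),  # 45 minutes old
    "listing-2": datetime(2025, 1, 1, 9, 0, tzinfo=timezone.utc),    # 27 hours old
}
stale_daily = [k for k, ts in feed.items() if is_stale(ts, now=now)]
stale_30min = [k for k, ts in feed.items() if is_stale(ts, timedelta(minutes=30), now=now)]
print(stale_daily)  # ['listing-2']
print(stale_30min)  # ['listing-1', 'listing-2']
```

The same record can be fresh enough for a daily valuation report yet stale for a 30-minute listing alert, which is why the window is a parameter rather than a constant.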

Completeness: Bridging the Gaps

Incomplete data leaves room for uncertainty, hiding both risks and opportunities. A property record that includes only basic details like an address and sale price offers limited insight. By contrast, access to 700+ attributes, such as permits, liens, pre-foreclosure status, household demographics, and lifestyle preferences, can reveal distressed properties with untapped equity that basic listings overlook.

Comprehensive data transforms property records from simple directories into powerful tools for strategic decision-making. For example, Crexi drastically reduced data processing time by incorporating detailed property owner data. According to Chris Finck, Director of Product Management at Crexi:

"What used to take 30 minutes now takes 30 seconds. BatchData makes our platform superhuman."

Completeness also means having full transaction histories, not just the latest sale, and knowing lien balances rather than merely noting that a lien exists. Modern PropTech platforms now provide over 1,000 unique data points per property, covering everything from construction details like HVAC systems and roof types to owners’ financial and lifestyle profiles.
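Completeness is easiest to manage when it is measured. The minimal sketch below scores a record against a list of required fields; the field names in `REQUIRED_FIELDS` are hypothetical, and a real team would define its own list per use case.

```python
# Hypothetical set of fields a team might treat as mandatory for every property record.
REQUIRED_FIELDS = (
    "address", "bedrooms", "bathrooms",
    "living_area_sqft", "owner_name", "last_sale_price",
)


def missing_fields(record: dict) -> list[str]:
    """List required fields that are absent, None, or empty strings (0 counts as present)."""
    return [f for f in REQUIRED_FIELDS if record.get(f) in (None, "")]


def completeness(record: dict) -> float:
    """Share of required fields that are populated, from 0.0 to 1.0."""
    return 1 - len(missing_fields(record)) / len(REQUIRED_FIELDS)


# bedrooms=0 (a studio) is a real value, so it counts as populated.
sparse = {"address": "12 Maple St", "bedrooms": 0, "owner_name": "J. Smith"}
print(missing_fields(sparse))  # ['bathrooms', 'living_area_sqft', 'last_sale_price']
print(completeness(sparse))    # 0.5
```

Treating `0` as present but `None` and `""` as missing avoids a common bug where a studio's zero-bedroom count is mistaken for a gap.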

Common Data Quality Challenges in PropTech

Sources of Inaccuracies

The reliance on manual data entry in real estate creates a breeding ground for errors. From typos in street names to swapped digits in zip codes and misspelled unit numbers, these small mistakes can cause big headaches. They disrupt data matching, complicate geocoding, and slow down property searches, creating a ripple effect of challenges when integrating data across systems.

But not all errors are accidental. Intentional data manipulation is another hurdle, with some agents altering data to inflate inventory numbers artificially. This practice skews analytics, leading to unreliable insights.

Adding to the complexity, many property records still depend on outdated town assessor data – some of it untouched for decades. Without updates or verification, this legacy information often lacks accuracy. To make matters worse, there’s no universal agreement on basic definitions. For instance, what qualifies as "usable square footage" or a "full bath" can differ depending on the source. This lack of standardization introduces even more variation, making it harder to trust the data.

Data Fragmentation and Inconsistencies

Real estate data is notoriously fragmented. It’s scattered across 640 MLSs and over 3,000 county tax assessor offices, each with its own unique data structure and terminology. This fragmentation leads to what experts call "integration paralysis." Even with access to a wealth of information, companies struggle to merge it effectively. As ScrapeHero aptly put it, "The current state… is so distributed, uncoordinated, and chaotic that it is bound to be dirty and inconsistent".

The lack of standard formats further complicates things. For example, one jurisdiction might include finished basements when calculating square footage, while another only counts above-grade living areas. These inconsistencies can throw off financial models and even derail deals during due diligence. This is especially problematic for firms operating across regions with varying regulations and market norms. Without standardized frameworks, maintaining reliable PropTech data becomes a monumental task.
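One way to reconcile such figures is to normalize every source to a single definition, such as above-grade living area, before comparing or modeling. The sketch below assumes you already know, per source, whether its reported figure includes a finished basement and how large that basement is; the `SqftReport` type and its fields are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SqftReport:
    reported_sqft: int
    includes_finished_basement: bool  # hypothetical per-jurisdiction convention flag
    finished_basement_sqft: int = 0


def above_grade_sqft(report: SqftReport) -> int:
    """Normalize a reported figure to above-grade living area only."""
    if not report.includes_finished_basement:
        return report.reported_sqft
    if report.finished_basement_sqft > report.reported_sqft:
        # The inputs contradict each other; don't guess.
        raise ValueError("basement area exceeds reported total; send record for review")
    return report.reported_sqft - report.finished_basement_sqft


print(above_grade_sqft(SqftReport(2400, True, 600)))  # 1800
print(above_grade_sqft(SqftReport(1800, False)))      # 1800
```

After normalization, two records that looked 600 sq ft apart turn out to describe the same house.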

This disjointed data landscape doesn’t just create technical challenges – it directly impacts operational workflows and financial performance.

Impact on Business Operations

Poor data quality has a direct and costly impact on business operations. Bad data has been estimated to cost the U.S. economy $3.1 trillion a year, and the average organization loses roughly $12.9 million annually. For real estate professionals, this often translates into hours spent cleaning up data instead of closing deals. Marketing teams, meanwhile, waste budget on undeliverable mail and disconnected phone numbers.

Inaccurate property data also makes it harder to identify high-equity prospects or motivated sellers. Worse, contacting numbers listed on the National Do Not Call Registry or known litigators can lead to FCC investigations or lawsuits. These legal risks can far outweigh the costs of maintaining clean, reliable data.
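At its core, compliance scrubbing is a set-membership check run before any outreach. The minimal sketch below assumes you already hold the Do Not Call and litigator suppression lists as sets of 10-digit strings; how those lists are obtained and how often they must be refreshed is outside its scope.

```python
import re


def last_ten_digits(phone: str) -> str:
    """Reduce a phone string to its last 10 digits for comparison."""
    return re.sub(r"\D", "", phone)[-10:]


def scrub(phones: list[str], dnc: set[str], litigators: set[str]) -> tuple[list[str], list[str]]:
    """Split phones into (ok_to_call, suppressed) using sets of 10-digit numbers."""
    blocked = dnc | litigators
    ok_to_call, suppressed = [], []
    for phone in phones:
        (suppressed if last_ten_digits(phone) in blocked else ok_to_call).append(phone)
    return ok_to_call, suppressed


ok_to_call, suppressed = scrub(
    ["(415) 555-0134", "+1 212 555 0199", "312-555-0147"],
    dnc={"4155550134"},          # hypothetical registry entry
    litigators={"3125550147"},   # hypothetical known-litigator entry
)
print(ok_to_call)   # ['+1 212 555 0199']
print(suppressed)   # ['(415) 555-0134', '312-555-0147']
```

Normalizing to digits first matters: without it, "(415) 555-0134" and "415-555-0134" would not match, and a registered number could slip through.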

Consider the time spent researching LLC-owned properties to find the actual owner – a task that could be automated with better data infrastructure. Without it, firms risk losing deals to competitors who can move faster. These operational inefficiencies highlight why investing in a strong data quality strategy isn’t just a nice-to-have – it’s essential for staying competitive.

| Data Challenge | Operational Impact | Financial Impact |
|---|---|---|
| Manual Data Entry | Slower lead processing; higher error rates | Increased labor costs; missed opportunities |
| Fragmented MLS Sources | Inconsistent property details (e.g., beds/baths) | Faulty valuations; poor investment decisions |
| Outdated Contact Info | Wasted time on "dead" leads | Reduced marketing ROI; potential spam issues |
| Compliance Gaps | Risk of FCC/DNC violations | Expensive legal settlements or fines |


BatchData’s Approach to Ensuring Data Quality

Property Data Enrichment and Verification

BatchData takes a thorough approach to maintaining data quality, combining automated testing with human oversight. The platform aggregates data from more than 3,200 sources – including MLSs, county assessor offices, and transaction records – to ensure reliability, even if some sources experience disruptions.
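The source doesn't describe how BatchData blends its inputs, but the general idea of multi-source resilience can be illustrated with one simple policy: for each field, keep the non-null value from the most recently updated source. A source that is offline simply contributes no record, and the others fill the gap. The record layout below (`source`, `updated_at`, field names) is hypothetical.

```python
from datetime import datetime
from typing import Any


def blend(records: list[dict[str, Any]], fields: list[str]) -> dict[str, Any]:
    """For each field, keep the non-null value from the most recently updated source."""
    newest_first = sorted(records, key=lambda r: r["updated_at"], reverse=True)
    merged: dict[str, Any] = {}
    for name in fields:
        merged[name] = next(
            (r[name] for r in newest_first if r.get(name) is not None), None
        )
    return merged


records = [
    {"source": "county", "updated_at": datetime(2025, 1, 1), "bedrooms": 3, "sale_price": 450000},
    {"source": "mls", "updated_at": datetime(2025, 3, 1), "bedrooms": None, "sale_price": 500000},
]
print(blend(records, ["bedrooms", "sale_price", "year_built"]))
# {'bedrooms': 3, 'sale_price': 500000, 'year_built': None}
```

"Newest non-null wins" is only one policy; a production system might instead rank sources by trust per field, for example preferring recorder data for sale prices.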

Each data run undergoes rigorous testing, with edge cases flagged for further human review. This dual-layer process helps BatchData manage a detailed catalog of over 1,000 data points for each of the 155 million property records in its system.
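The general pattern of automated checks plus human review can be sketched as a set of plausibility rules that route any failing record to a review queue instead of publishing it. The rules and thresholds below (0 to 20 bedrooms, 100 to 50,000 sq ft, positive sale price) are hypothetical examples, not BatchData's actual tests.

```python
def check_record(rec: dict) -> list[str]:
    """Return human-readable issues; an empty list means the record passed."""
    issues = []
    beds = rec.get("bedrooms")
    if beds is not None and not 0 <= beds <= 20:
        issues.append(f"implausible bedroom count: {beds}")
    sqft = rec.get("living_area_sqft")
    if sqft is not None and not 100 <= sqft <= 50_000:
        issues.append(f"implausible living area: {sqft}")
    price = rec.get("sale_price")
    if price is not None and price <= 0:
        issues.append(f"non-positive sale price: {price}")
    return issues


def route(records: list[dict]) -> tuple[list[dict], list[dict]]:
    """Split records into (auto_accepted, needs_human_review)."""
    accepted, review = [], []
    for rec in records:
        (review if check_record(rec) else accepted).append(rec)
    return accepted, review


batch = [
    {"bedrooms": 3, "living_area_sqft": 1500, "sale_price": 300000},
    {"bedrooms": 45, "living_area_sqft": 1500},  # likely a data-entry error
    {"sale_price": 0},                           # edge case for a reviewer
]
accepted, review = route(batch)
print(len(accepted), len(review))  # 1 2
```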

Address data is standardized using USPS-compliant rules, such as CASS (Coding Accuracy Support System) certification and Delivery Point Validation (DPV). This ensures that every address is accurate and deliverable. Property records are updated daily, with new recordings – like deeds, mortgages, and liens – typically added within 24 to 48 hours.
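CASS certification and DPV depend on USPS-licensed reference data and certified software, so they can't be replicated in a few lines. What a small script can show is the formatting side of standardization: uppercasing, stripping punctuation, and abbreviating street suffixes. The mapping below is a small subset of the USPS Publication 28 suffix table, and only a trailing suffix is handled.

```python
# Small subset of USPS Publication 28 street-suffix abbreviations (the full table is much longer).
SUFFIX_ABBREVIATIONS = {
    "STREET": "ST", "STR": "ST", "ST": "ST",
    "AVENUE": "AVE", "AV": "AVE", "AVE": "AVE",
    "BOULEVARD": "BLVD", "BLVD": "BLVD",
    "DRIVE": "DR", "DR": "DR",
    "ROAD": "RD", "RD": "RD",
    "LANE": "LN", "LN": "LN",
    "COURT": "CT", "CT": "CT",
}


def standardize_street_line(line: str) -> str:
    """Uppercase, drop periods/commas, collapse spaces, and abbreviate a trailing suffix."""
    tokens = line.upper().replace(".", " ").replace(",", " ").split()
    if tokens and tokens[-1] in SUFFIX_ABBREVIATIONS:
        tokens[-1] = SUFFIX_ABBREVIATIONS[tokens[-1]]
    return " ".join(tokens)


print(standardize_street_line("123 Main Street"))   # 123 MAIN ST
print(standardize_street_line(" 123  main st. "))   # 123 MAIN ST
print(standardize_street_line("45 Oak Avenue"))     # 45 OAK AVE
```

Once both spellings collapse to the same string, they can be matched against each other. Whether the address is actually deliverable still requires a DPV check.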

In addition to refining property records, BatchData focuses on accurately identifying the individuals associated with those records.

Skip Tracing and Contact Validation

Accurate property data is just the starting point. Identifying the actual person behind a property – especially when it’s owned by an LLC or trust – requires more advanced methods. BatchData’s skip tracing engine goes beyond basic lookups, connecting properties to the individual decision-makers behind corporate entities.
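Before any deeper lookup, entity-owned properties have to be recognized. A minimal sketch, assuming owner names arrive as free text: a regular expression over common entity designators marks records that need entity research. The designator list is illustrative, not exhaustive, and resolving the actual decision-maker requires data such as entity filings that this sketch doesn't cover.

```python
import re

# Illustrative (not exhaustive) designators that suggest a non-individual owner.
ENTITY_PATTERN = re.compile(
    r"\b(LLC|LLP|LP|INC|CORP|CORPORATION|TRUST|TRUSTEE|HOLDINGS|PARTNERS)\b"
)


def is_entity_owner(owner_name: str) -> bool:
    """True if the owner name looks like an LLC, corporation, trust, or similar entity."""
    normalized = owner_name.upper().replace(".", "")  # "L.L.C." -> "LLC"
    return ENTITY_PATTERN.search(normalized) is not None


owners = ["Maple Street Holdings, L.L.C.", "Smith Family Trust", "Jane Smith", "Inca Rivera"]
needs_entity_research = [o for o in owners if is_entity_owner(o)]
print(needs_entity_research)  # ['Maple Street Holdings, L.L.C.', 'Smith Family Trust']
```

The word boundaries (`\b`) keep a name like "Inca Rivera" from being mistaken for an "INC" designator.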

BatchData reports a 76% right-party contact (RPC) rate, nearly three times the industry average. The company attributes this to cross-verifying contact data from over a dozen premium sources and to real-time feedback from more than 20,000 users.

"Our data undergoes continuous validation from over a dozen sources to ensure top-tier right-party contact rates, which are currently nearly three times higher than the industry average." – BatchData

Real-time phone validation ensures that disconnected numbers are identified before they waste time and resources. The system also scrubs data against the National Do Not Call registry and flags known TCPA litigators using a Litigator Scrub, reducing the risk of legal issues. With access to over 258 million phone numbers and 100 million email addresses, BatchData covers more than 99% of the U.S. population.
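A cheap first layer of phone validation is a structural check against North American Numbering Plan (NANP) rules. It can reject impossible numbers before a paid lookup, but it can't tell whether a line is disconnected. That still requires live line-status data like the real-time validation described above. A minimal sketch:

```python
import re
from typing import Optional


def normalize_nanp(raw: str) -> Optional[str]:
    """Return a 10-digit NANP number, or None if the format is impossible.

    This checks structure only; it cannot tell whether a line is connected.
    """
    digits = re.sub(r"\D", "", raw)
    if len(digits) == 11 and digits.startswith("1"):
        digits = digits[1:]  # drop the +1 country code
    if len(digits) != 10:
        return None
    area_code, exchange = digits[:3], digits[3:6]
    # NANP area codes and exchanges cannot begin with 0 or 1,
    # and N11 codes (411, 911, ...) are not assigned as area codes.
    if area_code[0] in "01" or exchange[0] in "01" or area_code[1:] == "11":
        return None
    return digits


for raw in ["(415) 555-0134", "+1 212-555-0199", "123-456-7890", "555-0134"]:
    print(raw, "->", normalize_nanp(raw))
```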

This meticulous verification process ensures that outreach efforts are targeted and effective, minimizing risks and maximizing results.

API-Driven Validation for Easy Integration

BatchData’s REST API offers lightning-fast validation services, enabling real-time checks for addresses, phone numbers, and geocodes. This prevents inaccurate data from entering your CRM and streamlines multiple data needs – such as property attributes, skip tracing, and compliance scrubbing – into one unified interface.

The API is developer-friendly, featuring interactive documentation for testing endpoints and live responses. Official SDKs for Python and Node.js make integration straightforward. Features like address autocomplete suggest USPS-standardized addresses in real time, enhancing accuracy and efficiency. The API also includes a Phone Number Confidence Score, helping prioritize outreach to the most reachable leads. It cleans up duplicate records and invalid addresses, reducing wasted marketing dollars while improving user experience. For businesses with high-volume needs, enterprise SLAs ensure consistent performance, even during peak usage periods.
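The source doesn't specify the scale or field name of the Phone Number Confidence Score, so the sketch below assumes a hypothetical 0 to 100 `confidence` value and a cutoff of 50. It shows the general idea of using such a score: drop the least reachable numbers and call the rest in order of confidence.

```python
from dataclasses import dataclass


@dataclass
class PhoneLead:
    owner: str
    phone: str
    confidence: int  # hypothetical 0-100 score from a phone-validation service


def prioritize(leads: list[PhoneLead], min_confidence: int = 50) -> list[PhoneLead]:
    """Drop low-confidence numbers and order the rest from most to least reachable."""
    kept = [lead for lead in leads if lead.confidence >= min_confidence]
    return sorted(kept, key=lambda lead: lead.confidence, reverse=True)


leads = [
    PhoneLead("Lee", "2125550199", 40),
    PhoneLead("Patel", "4155550134", 90),
    PhoneLead("Garcia", "3125550147", 70),
]
print([lead.owner for lead in prioritize(leads)])  # ['Patel', 'Garcia']
```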

Proven Strategies for Maintaining Data Integrity

Implementing Data Governance Policies

To maintain accurate and reliable data, start by establishing strong data governance practices. Assigning clear roles, such as data stewards, ensures that specific individuals are responsible for keeping records accurate. Without clear accountability, data management often suffers from the "everyone’s job is no one’s job" dilemma, which can lead to errors and neglect.

Create a catalog for every data asset, including its origin, definition, and connections to other datasets. This approach, known as data lineage tracking, makes it much easier to trace errors back to their source and resolve issues before they spread.
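A lineage catalog doesn't need special tooling to start. The minimal sketch below records each dataset's origin, definition, and upstream inputs, then walks the graph to list every source feeding a given dataset. The dataset names are hypothetical.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class CatalogEntry:
    name: str
    origin: str      # where the data comes from
    definition: str  # what the dataset means, in plain language
    upstream: list[str] = field(default_factory=list)  # datasets this one is built from


def trace_upstream(catalog: dict[str, CatalogEntry], name: str) -> list[str]:
    """Breadth-first walk of a dataset's lineage, nearest sources first."""
    order: list[str] = []
    seen: set[str] = set()
    queue = deque(catalog[name].upstream)
    while queue:
        current = queue.popleft()
        if current in seen:
            continue
        seen.add(current)
        order.append(current)
        if current in catalog:
            queue.extend(catalog[current].upstream)
    return order


catalog = {
    "valuation_model": CatalogEntry("valuation_model", "internal", "AVM inputs", ["property_master"]),
    "property_master": CatalogEntry("property_master", "internal", "merged property records",
                                    ["mls_feed", "county_assessor"]),
    "mls_feed": CatalogEntry("mls_feed", "MLS", "active and sold listings"),
    "county_assessor": CatalogEntry("county_assessor", "county", "assessed values and characteristics"),
}
print(trace_upstream(catalog, "valuation_model"))
# ['property_master', 'mls_feed', 'county_assessor']
```

When a valuation looks wrong, this list tells you exactly which feeds to inspect, starting with the nearest.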

Incorporate ongoing quality assurance processes that automatically profile, validate, and cleanse data. For example, in the real estate sector, where records are frequently updated with new deeds, mortgages, and ownership transfers, your governance policies should be designed to handle constant changes.

Using Validation and Monitoring Tools

Automated validation tools are essential for catching errors right at the point of data entry. For instance, real-time phone verification can flag disconnected numbers instantly, while address validation ensures accuracy as data is entered.
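Point-of-entry validation can be structured as a list of small, independent checks that must all pass before a record is written to the CRM. In the sketch below, the field names and the two rules (required fields and a five-digit ZIP) are hypothetical. In practice, some checks would call external address or phone verification services.

```python
import re
from typing import Callable, Optional

# A validator inspects a record and returns an error message, or None if it passes.
Validator = Callable[[dict], Optional[str]]


def required(field_name: str) -> Validator:
    """Build a validator that rejects a missing or empty field."""
    def check(record: dict) -> Optional[str]:
        return None if record.get(field_name) not in (None, "") else f"missing {field_name}"
    return check


def zip5(record: dict) -> Optional[str]:
    return None if re.fullmatch(r"\d{5}", str(record.get("zip", ""))) else "ZIP must be 5 digits"


def entry_errors(record: dict, validators: list[Validator]) -> list[str]:
    """Run every validator; an empty result means the record may be written to the CRM."""
    return [msg for msg in (check(record) for check in validators) if msg is not None]


VALIDATORS = [required("owner_name"), required("street"), zip5]

print(entry_errors({"owner_name": "Jane Smith", "street": "12 Oak St", "zip": "02139"}, VALIDATORS))  # []
print(entry_errors({"street": "12 Oak St", "zip": "2139"}, VALIDATORS))
# ['missing owner_name', 'ZIP must be 5 digits']
```

Returning every error at once, rather than stopping at the first, lets the person entering data fix everything in one pass.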

Use API-driven solutions to standardize and verify data in real time. These tools not only reduce human error during data entry but also support compliance by automatically cross-checking contacts against the National Do Not Call Registry and databases of known litigators.

Instead of relying on periodic audits, implement continuous data monitoring. This is especially important for information that changes frequently, such as property valuations, tax assessments, and ownership records. Daily updates ensure your data stays current and accurate.
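Continuous monitoring often starts with comparing each day's snapshot to the previous one. The minimal sketch below reports, per property ID, which fields changed and which records appeared or disappeared. The IDs and field names are hypothetical.

```python
def diff_snapshots(previous: dict[str, dict], current: dict[str, dict]) -> dict[str, list[str]]:
    """Map each property ID to the fields that changed between two daily snapshots."""
    changes: dict[str, list[str]] = {}
    for pid, record in current.items():
        old = previous.get(pid)
        if old is None:
            changes[pid] = ["<new record>"]
            continue
        changed = sorted(k for k in record.keys() | old.keys() if record.get(k) != old.get(k))
        if changed:
            changes[pid] = changed
    for pid in previous.keys() - current.keys():
        changes[pid] = ["<removed>"]
    return changes


yesterday = {"A": {"owner": "Smith", "tax": 4200}, "C": {"owner": "Lee"}}
today = {"A": {"owner": "Jones", "tax": 4200}, "B": {"owner": "Diaz"}}
print(diff_snapshots(yesterday, today))
```

An ownership change on property "A" is exactly the kind of signal that should trigger downstream updates, such as refreshing contact data for the new owner.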

Standardizing your data framework across platforms further enhances its reliability and consistency.

Standardizing Data with RESO and Other Frameworks

The RESO Data Dictionary offers a standardized framework specifically designed for real estate data, with over 1,700 fields and 3,100 standardized lookups to ensure consistency across systems. By using standardized field names and definitions, you can facilitate seamless data sharing between platforms without the risk of translation errors.
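In practice, adopting the Data Dictionary means maintaining an explicit mapping from each source's native field names to RESO names. The target names below (`BedroomsTotal`, `LivingArea`, `ListPrice`, `PostalCode`) are RESO Data Dictionary fields, while the source schemas are hypothetical. The function refuses unmapped fields instead of passing them through, which enforces the no-exceptions policy described next.

```python
# Hypothetical source schemas mapped onto RESO Data Dictionary field names.
FIELD_MAPS = {
    "mls_a": {"beds": "BedroomsTotal", "sqft": "LivingArea", "price": "ListPrice", "zip": "PostalCode"},
    "county_b": {"BEDROOM_CNT": "BedroomsTotal", "LIV_AREA": "LivingArea", "ZIP5": "PostalCode"},
}


def to_reso(source: str, record: dict) -> dict:
    """Rename a source record's fields to RESO names, refusing unmapped fields."""
    mapping = FIELD_MAPS[source]
    unmapped = sorted(set(record) - set(mapping))
    if unmapped:
        # Fail loudly instead of inventing a one-off field name for this source.
        raise KeyError(f"{source} has unmapped fields: {unmapped}")
    return {mapping[name]: value for name, value in record.items()}


print(to_reso("county_b", {"BEDROOM_CNT": 3, "LIV_AREA": 1650, "ZIP5": "30301"}))
# {'BedroomsTotal': 3, 'LivingArea': 1650, 'PostalCode': '30301'}
```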

Stick to a strict standardization policy and avoid making one-off exceptions to your data model. These exceptions can snowball over time, leading to inconsistent lookups and requiring your team to manage multiple conflicting datasets. By adhering to standardization, you not only improve data reliability but also boost operational efficiency.

Conclusion: Growth Through High-Quality Data

Organizations lose an average of $12.9 million annually due to poor data quality. On the flip side, having reliable and accurate data boosts revenue, enhances efficiency, and strengthens competitive positioning. This stark difference highlights just how impactful high-quality data can be for real estate operations.

To achieve this, focus on clear data governance with dedicated data stewards, automated validation and monitoring tools, and a standardized framework aligned with industry standards like RESO. BatchData exemplifies this approach by pulling from over 3,200 sources, offering a 76% right-party contact rate (nearly three times the industry average), and maintaining 99.99% uptime. With access to 155 million property records and over 1,000 unique data points per property, BatchData makes new transaction data available within 24 to 48 hours of recording, giving users a critical time advantage.

In the real estate world of 2026 and beyond, success won’t belong to those with the largest data sets. Instead, it will favor those who have accurate, timely, and actionable data. By consolidating data providers, automating validation at every touchpoint, and utilizing API-driven solutions, you can eliminate inefficiencies like outdated contacts and disconnected numbers that waste marketing budgets and slow decision-making.

Ultimately, competitive advantage isn’t about the amount of data you have – it’s about having complete confidence in it. When your team trusts the intelligence they’re working with, growth becomes inevitable.

FAQs

How does poor data quality affect revenue and efficiency in PropTech?

Poor data quality can take a serious toll on both revenue and efficiency in the PropTech world. When property details, ownership records, or contact information are inaccurate or incomplete, the ripple effects can be costly. Flawed analytics stemming from bad data often lead to mispriced properties, missed leasing opportunities, and expensive rework. The financial impact is clear: while poor data can shave off several percentage points of revenue, clean and accurate data has been shown to boost revenue by as much as 8%.

On the operational side, unreliable data creates headaches like duplicate records, manual corrections, and even compliance risks. These inefficiencies force teams to waste valuable time fixing errors instead of driving growth. By adopting tools for automated data cleaning and verification, PropTech companies can ensure their information stays accurate and up-to-date. This not only sharpens decision-making but also reduces wasted resources, paving the way for greater efficiency and profitability.

What are the key dimensions of data quality, and why do they matter?

Data quality is commonly described along eight dimensions, each of which helps ensure information is dependable and fit for its intended purpose:

- Accuracy: Data mirrors real-world facts and events without distortion.

- Completeness: All required fields and records are present, leaving no gaps.

- Consistency: Values remain uniform across different systems and datasets.

- Timeliness: Data is current and accessible when needed.

- Uniqueness: Duplicate entries are removed to avoid redundancy.

- Validity: Information adheres to established formats or rules.

- Integrity: Connections between data elements are preserved.

- Relevance: Data aligns with the specific needs of its purpose.

These dimensions are essential because low-quality data can create significant problems – think of incorrect pricing, missed business opportunities, or even compliance issues. For example, outdated or inconsistent data can lead to flawed decisions, while duplicates or broken relationships between data points can cause confusion and system errors. By addressing these dimensions, you can ensure your data is reliable, supporting smarter decisions and smoother operations.

What steps can PropTech companies take to ensure their data is accurate and complete?

Ensuring property data is accurate and complete starts with a well-structured validation process. First, confirm completeness by verifying that essential fields – like addresses, square footage, ownership details, and contact information – are fully filled out. Next, cross-check this information against trusted public sources, such as county registries, MLS feeds, or tax assessor databases, to spot and resolve any inconsistencies.
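The cross-checking step can be sketched as a field-by-field comparison between an MLS record and the assessor record for the same parcel. Exact matches are required for room counts, and square footage gets a tolerance, since sources measure it differently. The field names and the 5% tolerance are hypothetical choices.

```python
def cross_check(mls: dict, assessor: dict, sqft_tolerance: float = 0.05) -> list[str]:
    """Compare an MLS record with the county assessor record for the same parcel."""
    issues = []
    for name in ("bedrooms", "bathrooms"):
        if mls.get(name) != assessor.get(name):
            issues.append(f"{name}: MLS={mls.get(name)} assessor={assessor.get(name)}")
    mls_sqft, county_sqft = mls.get("living_area_sqft"), assessor.get("living_area_sqft")
    if mls_sqft and county_sqft and abs(mls_sqft - county_sqft) / county_sqft > sqft_tolerance:
        issues.append(f"living_area_sqft differs by more than {sqft_tolerance:.0%}")
    return issues


mls = {"bedrooms": 4, "bathrooms": 2, "living_area_sqft": 2000}
county = {"bedrooms": 2, "bathrooms": 2, "living_area_sqft": 1980}
print(cross_check(mls, county))  # ['bedrooms: MLS=4 assessor=2']
```

A four-versus-two bedroom mismatch like this one is exactly the unpermitted-conversion case worth a closer look before a valuation relies on it.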

Regular data cleaning is equally important. This involves eliminating duplicates, standardizing formats (e.g., “123 Main St.” versus “123 Main Street”), and fixing errors to maintain consistency. Leveraging automation tools can make a big difference here, streamlining tasks like address standardization, email and phone verification, and geocoding. These tools not only save time but also enhance accuracy.
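Deduplication needs a stable key. The minimal sketch below uses the assessor parcel number (APN), stripped of formatting, and keeps the most complete record per parcel. It assumes every record comes from a single county: APNs are assigned per county, so a multi-county dataset would need to combine a county identifier with the APN. The record fields are hypothetical.

```python
import re


def parcel_key(apn: str) -> str:
    """Normalize an assessor parcel number by dropping dashes, spaces, and dots."""
    return re.sub(r"[^0-9A-Z]", "", apn.upper())


def dedupe(records: list[dict]) -> list[dict]:
    """Keep one record per parcel: the one with the most populated fields."""
    best: dict[str, tuple[int, dict]] = {}
    for rec in records:
        key = parcel_key(rec["apn"])
        filled = sum(1 for v in rec.values() if v not in (None, ""))
        if key not in best or filled > best[key][0]:
            best[key] = (filled, rec)
    return [rec for _, rec in best.values()]


rows = [
    {"apn": "123-456-789", "owner": "Smith", "bedrooms": None},
    {"apn": "123 456 789", "owner": "Smith", "bedrooms": 3},
    {"apn": "987-654-321", "owner": "Lee", "bedrooms": 2},
]
print(dedupe(rows))
# [{'apn': '123 456 789', 'owner': 'Smith', 'bedrooms': 3}, {'apn': '987-654-321', 'owner': 'Lee', 'bedrooms': 2}]
```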

To keep data reliable over time, establish ongoing maintenance routines. Schedule periodic updates, monitor data in real time, and ensure compliance with regulations like FCRA, CCPA, and GDPR. By making data quality a measurable priority and embedding these processes into daily operations, PropTech companies can build trust and stand out with reliable, high-quality data.